This article was published as a part of the Data Science Blogathon.

Introduction

While trying to build a better predictive model, we come across a famous ensemble technique in machine learning known as Random Forest. The Random Forest algorithm comes with a built-in validation concept called the OOB_Score (out-of-bag score).

Random Forest is a powerful ensemble technique, but most people skip the concept of OOB_Score while learning the algorithm and hence miss part of what makes Random Forest valuable as an ensemble method.

This blog will walk you through the OOB_Score concept with the help of examples.

Table of Contents

- A quick introduction to the need for a Random Forest algorithm

- Bootstrapping and Out-of-Bag Sample

- Out-of-Bag Score

- Pros and Cons of Out-of-Bag Score

Pre-Requisites

Before we proceed, I assume we are all familiar with the following concepts. If not, review these topics first and then come back here to proceed with OOB_Score.

- Decision Trees

- Hold-out Validation

- K-fold Cross-Validation

- The idea used behind the Random Forest algorithm

Let’s begin !!

1. Quick introduction to Random Forest

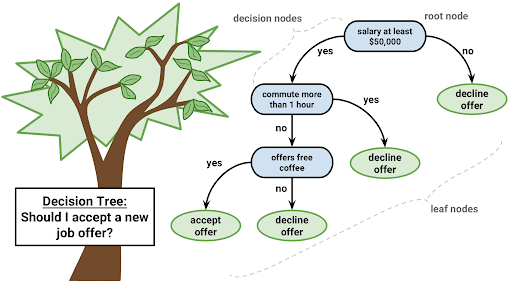

One of the most interpretable models used for supervised learning is the Decision Tree, where the algorithm makes decisions and predicts values using if-else conditions, as shown in the example.

Decision trees are easy to understand and interpret, but they have one major issue:

- If a tree is grown to its maximum depth (the default setting), it will capture even the minute details of the training dataset, including noise.

- Applying such a tree to testing data then gives a high error due to high variance (overfitting of the training data).
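To see this overfitting in practice, here is a minimal sketch. It assumes scikit-learn is installed and uses a synthetic dataset with some label noise; the dataset and its parameters are illustrative choices, not something taken from the example above.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data (an assumption for illustration); flip_y adds label noise.
X, y = make_classification(n_samples=500, n_features=10, n_informative=4,
                           flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

# max_depth=None (the default) lets the tree grow until every leaf is pure.
tree = DecisionTreeClassifier(max_depth=None, random_state=0)
tree.fit(X_train, y_train)

train_acc = tree.score(X_train, y_train)
test_acc = tree.score(X_test, y_test)
print(f"train accuracy: {train_acc:.3f}")
print(f"test accuracy:  {test_acc:.3f}")
```

The fully grown tree memorizes the training rows, noise included, so its training accuracy is perfect while its accuracy on the held-out rows is lower.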

Hence, to get lower variance while still building on easy-to-understand decision trees, the Random Forest algorithm was introduced.

Random Forests, or Random Decision Forests, are an ensemble learning method for classification and regression problems. They operate by constructing a multitude of decision trees at training time, each on its own bootstrapped sample, and combining their outputs: the majority vote of the trees for classification, or the average of their predictions for regression.

Constructing many decision trees helps the model generalize the pattern in the data rather than memorize the training set, and therefore reduces the variance (reduces overfitting).
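As a rough illustration of how the outputs of many trees are combined, here is a small sketch in plain Python. The votes and values are made up, and the function names are hypothetical helpers, not part of any library.

```python
from collections import Counter
from statistics import mean

def majority_vote(tree_predictions):
    """Classification: return the label predicted by the most trees."""
    return Counter(tree_predictions).most_common(1)[0][0]

def average_prediction(tree_predictions):
    """Regression: return the mean of the values predicted by the trees."""
    return mean(tree_predictions)

# Hypothetical predictions from five trees for a single row.
votes = ["pet", "not pet", "pet", "pet", "not pet"]
values = [2.0, 3.0, 4.0]

print(majority_vote(votes))        # pet
print(average_prediction(values))  # 3.0
```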

But how do we select a training set for each new decision tree in a Random Forest? This is where bootstrapping kicks in!!

2. Bootstrapping and Out-of-Bag Sample

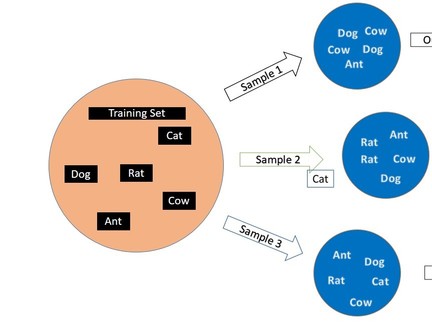

New training sets for multiple decision trees in Random Forest are made using the concept of Bootstrapping, which is basically random sampling with replacement.

Let us look at an example to understand how bootstrapping works:

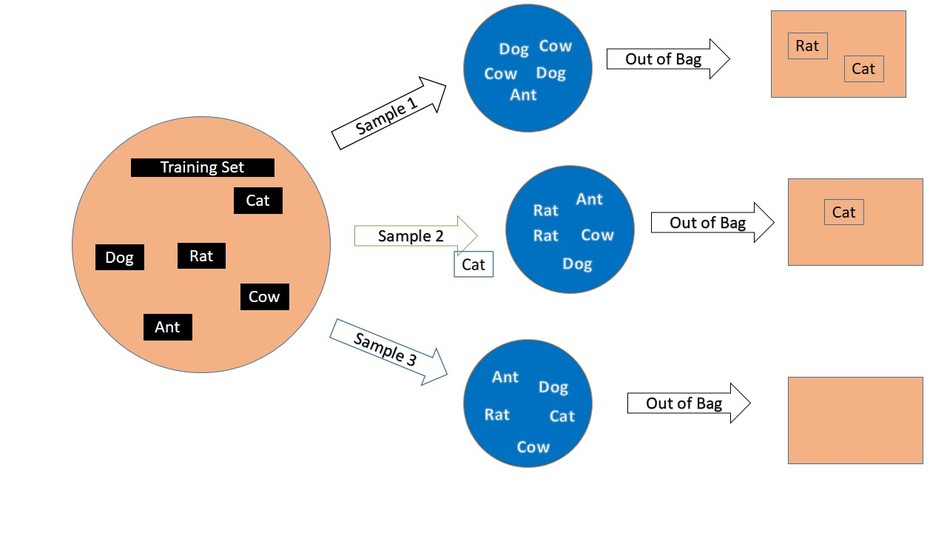

Here, the main training dataset consists of five animals. To make different samples out of this one main training set:

- Fix the sample size.

- Randomly choose a data point for the sample.

- After selection, put it back in the main set (replacement).

- Again choose a data point from the main training set for the sample and, after selection, put it back.

- Repeat the above steps until we reach the specified sample size.

Note: Random Forest bootstraps the data points and also considers only a random subset of the features (typically at each split) while building multiple independent decision trees.
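The bootstrapping steps can be sketched in a few lines of plain Python. This is only an illustration: Rat, Cow and Cat come from the example, while Dog and Hen are placeholder names, and `bootstrap_sample` is a hypothetical helper.

```python
import random

def bootstrap_sample(data, sample_size, rng):
    """Draw sample_size items from data, with replacement."""
    sample = []
    for _ in range(sample_size):
        # Pick one item at random; it stays in `data`, so it can be picked again.
        sample.append(rng.choice(data))
    return sample

# Rat, Cow and Cat appear in the example; Dog and Hen are placeholders.
animals = ["Cat", "Cow", "Dog", "Hen", "Rat"]
rng = random.Random(42)  # fixed seed so the run is repeatable

samples = [bootstrap_sample(animals, len(animals), rng) for _ in range(3)]
for i, sample in enumerate(samples, start=1):
    print(f"Sample {i}: {sample}")
```

Because every draw is made from the full list, a sample can contain the same animal several times and miss others entirely.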

Out-Of-Bag Sample

In the above example, we can observe that some animals are repeated within a sample and some animals do not occur even once in it.

Here, Sample 1 does not contain Rat and Cow, whereas Sample 3 contains every animal from the main training set.

Since data points are chosen randomly and with replacement, some of them fail to become part of a particular sample. These data points are known as the OUT-OF-BAG points of that sample.
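A minimal sketch of finding the out-of-bag points of one sample, in plain Python. The animal list is partly made up (Dog and Hen are placeholders), and `out_of_bag` and `oob_probability` are hypothetical helpers. The last lines use the standard result that a given row is missed by all n draws with probability (1 - 1/n)^n.

```python
import random

def out_of_bag(data, sample):
    """Return the items of data that never made it into sample."""
    drawn = set(sample)
    return [item for item in data if item not in drawn]

def oob_probability(n):
    """Chance that one given row is missed by all n draws with replacement."""
    return (1 - 1 / n) ** n

animals = ["Cat", "Cow", "Dog", "Hen", "Rat"]
rng = random.Random(0)
sample = rng.choices(animals, k=len(animals))  # sampling with replacement
oob = out_of_bag(animals, sample)

print("sample:", sample)
print("out-of-bag:", oob)
print(round(oob_probability(5), 4))       # 0.3277
print(round(oob_probability(10_000), 4))  # 0.3679
```

For large training sets, roughly 37% of the rows are therefore out-of-bag for any single tree, which is what makes them usable as a validation set for that tree.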

3. Out-of-Bag Score (OOB_Score)

Where does OOB_Score come into the picture? OOB_Score is a powerful validation technique, used especially with the Random Forest algorithm, that estimates how the model performs on data it has not seen.

Note: Hold-out and k-fold cross-validation need a separate validation split or several refits of the model. With OOB_Score, each data point is predicted only by the trees that did not see it during training, so there is no leakage of that data point into its own validation, and no rows have to be set aside. This is why OOB_Score is used for validating the model.

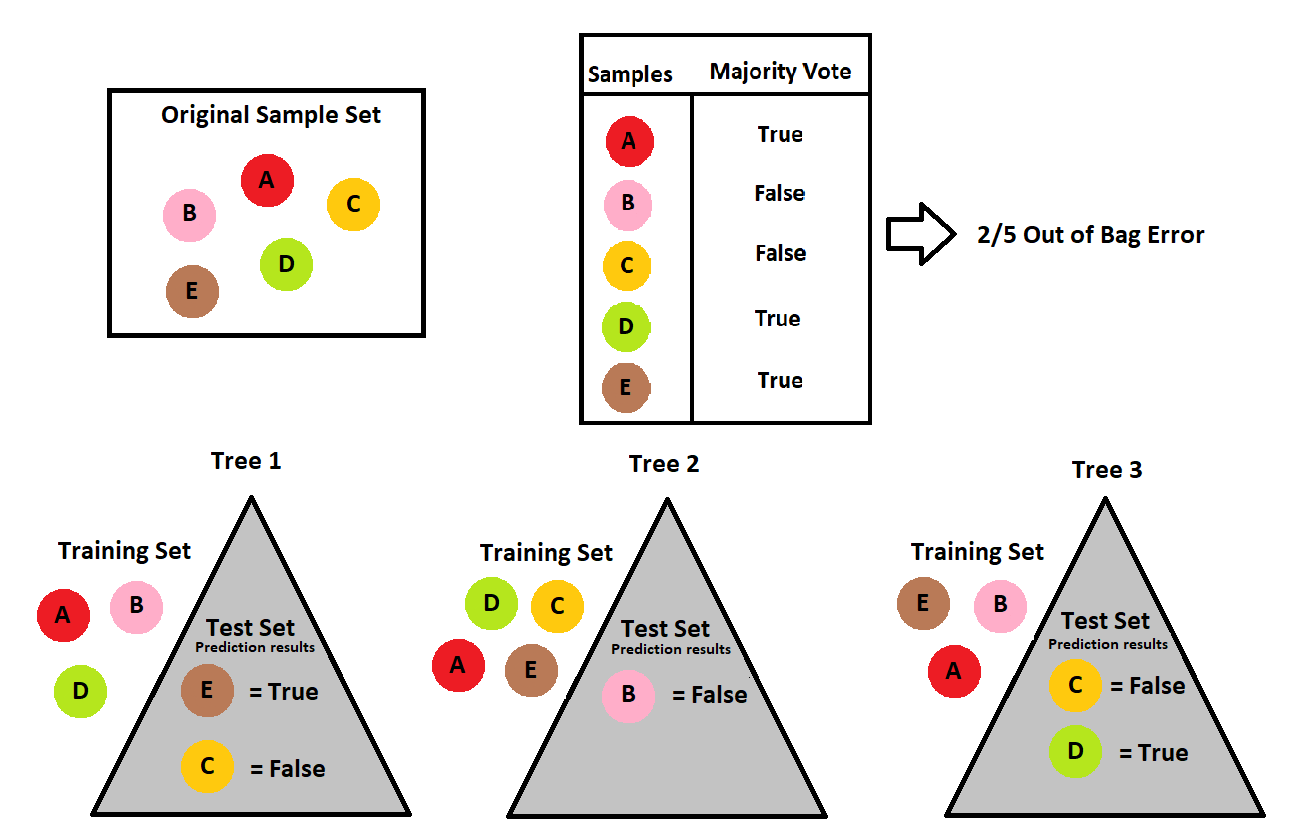

Let’s understand OOB_Score through an example:

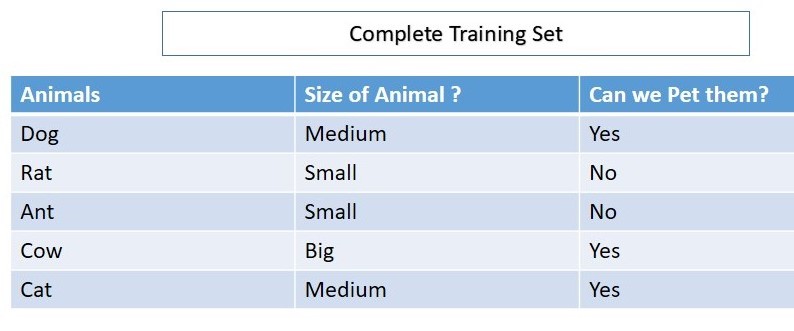

Here, we have a training set with 5 rows and a classification target variable indicating whether each animal is domestic (a pet) or not.

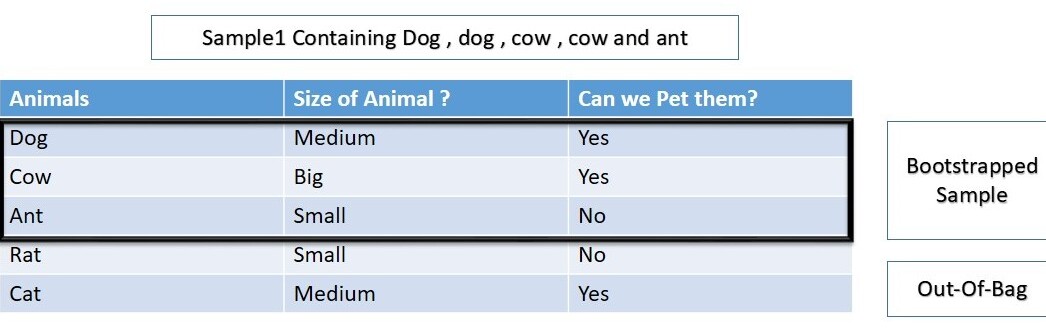

Out of the multiple decision trees built in the random forest, the bootstrapped sample for one particular decision tree, say DT_1, is shown in the figure.

Here, the Rat and Cat rows have been left out. Since Rat and Cat are OOB for DT_1, we predict the values for Rat and Cat using DT_1. (Note: DT_1 has not seen the Rat and Cat data while training.)

Just like DT_1, there would be many more decision trees where either Rat or Cat, or both of them, were left out.

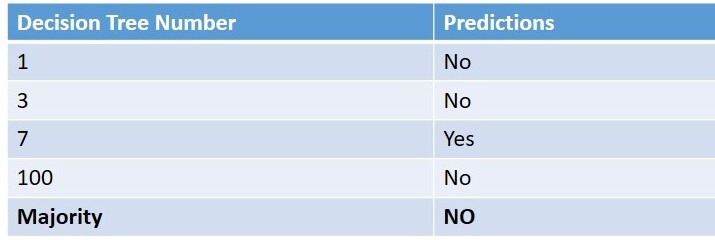

Say the 3rd, 7th, and 100th decision trees also had Rat as an OOB data point, which means none of them had seen the “Rat” data before predicting the value for Rat.

So, we record the predicted values for “Rat” from the trees DT_1, DT_3, DT_7, and DT_100.

Suppose the aggregated (majority) prediction turns out to be the same as the actual value for “Rat”.

(Note: None of these trees had seen the Rat data before, and the aggregated prediction for this data point is still correct.)

Similarly, every data point is passed for prediction to the trees for which it is OOB, and an aggregated prediction is recorded for each row.

The OOB_Score is computed as the fraction of rows whose aggregated out-of-bag prediction is correct.

And

OOB Error is the fraction of rows whose aggregated out-of-bag prediction is wrong, that is, 1 - OOB_Score.
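The aggregation can be sketched in plain Python as follows, reporting the score as a fraction of rows. All labels and per-tree predictions here are made up for illustration (only the tree names and the Rat and Cat rows follow the example), and `oob_score` is a hypothetical helper, not a library function.

```python
from collections import Counter, defaultdict

def oob_score(actual, oob_predictions):
    """Fraction of rows whose majority OOB vote matches the actual label.

    actual: dict mapping row -> true label
    oob_predictions: dict mapping tree name -> {row: predicted label},
        holding only the rows that were out-of-bag for that tree.
    """
    # Collect, for every row, the votes of the trees that never saw it.
    votes = defaultdict(list)
    for tree_preds in oob_predictions.values():
        for row, label in tree_preds.items():
            votes[row].append(label)

    correct = 0
    for row, row_votes in votes.items():
        majority = Counter(row_votes).most_common(1)[0][0]
        correct += (majority == actual[row])
    return correct / len(votes)

# Hypothetical labels for "is it a pet?" and hypothetical OOB predictions.
actual = {"Rat": "no", "Cat": "yes", "Cow": "yes", "Dog": "yes", "Hen": "yes"}
oob_predictions = {
    "DT_1": {"Rat": "no", "Cat": "yes"},
    "DT_3": {"Rat": "no", "Cow": "yes"},
    "DT_7": {"Rat": "yes", "Dog": "yes", "Hen": "no"},
    "DT_100": {"Rat": "no", "Hen": "no"},
}

score = oob_score(actual, oob_predictions)
error = 1 - score
print(f"OOB score: {score:.2f}")  # OOB score: 0.80
print(f"OOB error: {error:.2f}")  # OOB error: 0.20
```

In this toy data, Rat gets three "no" votes against one "yes", so its majority prediction is correct, while Hen is misclassified by both trees that did not see it, which gives 4 correct rows out of 5.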

4. Advantages of using OOB_Score:

- No leakage of data: Each row is validated only by trees that did not use it in training, so there is no leakage of data, and the score is an honest estimate of performance on unseen data.

- Less variance: [More variance ~ overfitting, i.e. a high training score and a low testing score]. Because the OOB_Score is computed on unseen rows, overfitting shows up in the score instead of staying hidden, which helps in choosing a model with lower variance.

- Better predictive model: A reliable validation score helps in tuning the forest, and so supports building a better predictive model.

- Less computation: The model is validated as it is being trained, so no separate validation set and no repeated refits (as in k-fold cross-validation) are needed.

Disadvantages of using OOB_Score:

- Time-consuming: The method validates the model as it is being trained, but computing the out-of-bag predictions adds time compared to training the same forest without it.

- Not ideal for large datasets: Because of this extra time, training can take noticeably longer when the dataset is huge.

- Best for small and medium-sized datasets: When the dataset is small or medium-sized, the extra time is modest, so OOB_Score is a good choice for validating the model.

End Notes !!

Random Forest can be a very powerful technique for making better predictions when we validate it with the OOB_Score. Even though computing the OOB_Score takes a bit more time, the validation it provides is worth the time spent training the random forest model with the OOB_Score parameter set to True.
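The article does not name a library, so the following is a sketch under the assumption that scikit-learn is used, where the parameter is spelled `oob_score=True` and the result is read from the `oob_score_` attribute. The Iris dataset and the number of trees are illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)

# bootstrap=True (the default) is required for OOB scoring.
forest = RandomForestClassifier(
    n_estimators=200, oob_score=True, bootstrap=True, random_state=0)
forest.fit(X, y)

# For a classifier, oob_score_ is the accuracy of the aggregated OOB predictions.
oob_accuracy = forest.oob_score_
print(f"OOB score: {oob_accuracy:.3f}")
print(f"OOB error: {1 - oob_accuracy:.3f}")

# Per-row class probabilities, averaged over the trees that did not see the row.
print(forest.oob_decision_function_.shape)
```

No separate validation split is created here: the whole dataset is passed to `fit`, and the score is still computed only from trees that did not train on each row.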

Thank you!!

I am always open to suggestions and you can contact me here.