Introduction

2017 has been an exciting year for data science professionals. That is evident from the technologies that emerged over the year, such as Face ID, which has changed the way we secure information on our mobile phones. Self-driving cars were once a myth, but they are now very much a reality, and governments around the world are starting to adopt them.

Data science is a field in which ground-breaking research is happening at a much faster pace than in any earlier emerging technology. The time between contemplating a research idea and actually implementing it has come down significantly. This is also fueled by the immense amount of resources freely available to everyone, which enables even a non-specialist to contribute to research in their own way. For example, GitHub (a collaborative platform for software development) is now paving the way for research ideas to be shared as working implementations. As Andrew Ng said:

Data is the new Oil

AI is the new Electricity

Personalization and automation are the talk of the day, and more and more industries such as financial services, healthcare, pharmaceuticals and automotive are adapting to the developments brought about by better machine learning and deep learning models. This article focuses on the defining moments of data science in 2017. We kept a few criteria in mind when we curated the list, namely:

- As a data science professional, does the event affect you in any way?

- Does it influence your learning or your daily workflow?

- Is it an innovative startup, product release or recent development?

- Is it an industry collaboration which will impact the future of data science?

We have also shared our predictions for the data science industry in 2018, which we believe gives us a lot to look forward to.

Enjoy!

Interesting Snippets of Year 2017

Power-Blox developed a scalable energy device capable of storing and distributing electricity from a variety of inputs

Tags – Startup, Renewable Energy

A young company, Power-Blox, is using algorithms to give energy grids “swarm intelligence.” Power-Blox gives grids “the ability to adapt automatically to fluctuating electrical loads and an ever-changing roster of power sources.” This matters as we move to grids supplied by wind, solar, and wave energy, whose output fluctuates minute by minute: it makes renewable sources of energy more usable.

Neuralink: A high-bandwidth and safe Brain-Machine Interface

Tags – Startup, Innovation

Probably the biggest announcement of 2017 came from Elon Musk, who announced the creation of a company called Neuralink that aims to build high-bandwidth and safe brain-machine interfaces. It looks like Elon has finally decided to bring The Matrix into reality, where learning a new skill, such as flying a helicopter, is just a matter of plugging a wire into your neocortex. All this may sound like exaggerated fiction, but Elon is known for making things real; take Tesla and SpaceX, for example.

If it all comes true, humans may soon have the technology to study and map the brain in its entirety. This has far-reaching implications, from vastly improving healthcare to augmenting human capacity. And Elon seems serious too. The company recently received $27 million in funding and is looking to raise another $100 million by selling shares. For us mortal minds, open the link in the heading to understand how it all started and how the mission of Neuralink is grand enough to change the future of humanity if it becomes reality.

Face Recognition for payment transaction in KFC China

Tags – Innovation, Retail Industry, Computer Vision

Alipay and KFC China are allowing customers to pay via facial recognition plus their phone numbers. No cash, credit cards or smartphones are necessary. This is the first retailer in the world to do so.

Deeplearn.js: an open-source, WebGL-accelerated JavaScript library for machine learning that runs entirely in a browser

Tags – Product release, machine learning, open source software

Software engineers Nikhil Thorat and Daniel Smilkov noted, “There are many reasons to bring machine learning into the browser. A client-side ML library can be a platform for interactive explanations, for rapid prototyping and visualization, and even for offline computation. And if nothing else, the browser is one of the world’s most popular programming platforms.”

Release of CatBoost: A machine learning library to handle categorical data automatically

Tags – machine learning, open source software

If you have built machine learning models with the “sklearn” library, you have probably seen this error, at least in your early days: “ValueError: could not convert string to float”. It occurs when the data contains categorical (string) variables. In “sklearn”, you are required to convert these categories to a numerical format first, using pre-processing methods such as “label encoding”, “one hot encoding” and others. “CatBoost”, a recently open-sourced library developed and contributed by Yandex, does this for you automatically.

Many such open source tools and libraries have been released in the last year. This article captures a few of the most popular ones.
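To make the encoding step described for CatBoost above concrete, here is a minimal pure-Python sketch of label encoding and one-hot encoding. It is an illustration only: the function names `label_encode` and `one_hot_encode` and the `colors` column are made up for this example, and this is not the sklearn or CatBoost API.

```python
def label_encode(values):
    """Map each distinct category to an integer code (sorted for determinism)."""
    categories = sorted(set(values))
    code_of = {category: code for code, category in enumerate(categories)}
    return [code_of[v] for v in values], categories


def one_hot_encode(values):
    """Turn each value into a 0/1 vector with a single 1 at its category's position."""
    codes, categories = label_encode(values)
    rows = [[1 if code == j else 0 for j in range(len(categories))] for code in codes]
    return rows, categories


colors = ["red", "green", "blue", "green"]  # made-up example column

# Converting a raw string to a number fails with the same kind of ValueError
# as the error message quoted in the text.
try:
    float(colors[0])
    conversion_failed = False
except ValueError:
    conversion_failed = True

codes, categories = label_encode(colors)
one_hot, _ = one_hot_encode(colors)
```

Label encoding imposes an arbitrary order on the categories (here alphabetical), which is one reason one-hot encoding is often preferred for models that would otherwise read meaning into that order.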

IBM Watson to aid in filing taxes

Tags – Company Collaboration, Finance

H&R Block, a tax preparation company, is partnering with IBM Watson to develop internal systems that help its employees file customers’ taxes. The US tax code is 74,000 pages long, which is more than any one person can know. IBM Watson will help tax professionals with prompts and questions to aid the tax interview process. At the end of this year’s tax process, this will also leave Watson with a massive library of tax data, which it can then analyze.

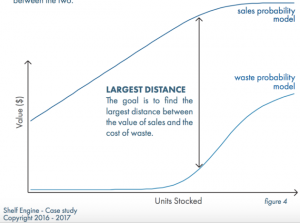

Shelf Engine: A startup developing AI to prevent food wastage

Tags – Startup, Food Industry

Shelf Engine is a startup developing robust models that help category managers at grocery stores match orders of hundreds or thousands of products to demand. In its case study, the company explains that many managers make orders based on their current waste numbers, a flawed method because “that decision isn’t based on a cumulation of waste and deliveries.” Shelf Engine uses an order prediction engine and probability models that analyze historical order and sales data, gross margins, and shelf life information. The more a customer uses the system, the more accurate its recommendations become. The startup is backed by Initialized Capital (Reddit co-founder Alexis Ohanian is a general partner), with participation from Founder’s Co-op, Liquid 2 Ventures (Joe Montana is a general partner), and others.

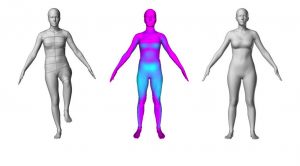

Body Labs – a start-up acquired by Amazon develops 3D models of individual human bodies from images

Tags – Company Acquisition, Fashion Retail

Body Labs, a computer vision startup, has developed applications that take any input, whether 2D photos, 3D scans or actual body measurements, and predict full 3D body shapes. The implications of this technology are massive, spanning commercial opportunities not only in fashion and apparel but also in fitness, gaming, health and manufacturing. It could help solve fitting problems for customers, especially in e-commerce, which is marred by huge numbers of returns due to size issues. Body Labs was founded by Michael Black, William J. O’Farrell, Eric Rachlin, and Alex Weiss, who were connected through Brown University and the Max Planck Institute for Intelligent Systems.

Data Science competition platform “Kaggle” joins Google Cloud

Tags – Company Acquisition

Google acquired Kaggle, a competition platform for data scientists, in March 2017. Kaggle is known for hosting data science and machine learning competitions. Google reportedly acquired Kaggle to enhance its AI and machine learning capabilities and to tap into the 600,000 data scientists in Kaggle’s community. Since the acquisition, Kaggle has continued to provide its services as before, but the pace of product enhancements on the platform has increased considerably. For example, “kernels”, which are online coding environments, are a lot smoother and provide a lot more functionality than before, such as longer runtimes.

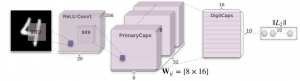

Capsule Networks – an improved deep learning architecture has been introduced

Tags – Research, Deep Learning

Geoffrey Hinton, one of the pioneers of deep learning, explains how capsule networks can improve on the traditional convolutional neural network architecture. If this technique is brought into application, it could beat the benchmarks set by previous techniques.

The idea itself is not new, but it has now been implemented in a stable way and has been seen to perform better.

Canada bets big on artificial intelligence with AI institute

Tags – Industry collaboration

Under Geoffrey Hinton, the Canadian government and big companies like Google and Facebook have invested $150 million in the Vector Institute to turn out 1,000 graduates in AI every year. The Vector Institute intends to propel Canada to the forefront of the global shift to artificial intelligence (“AI”) by promoting and maintaining Canadian excellence in deep learning and machine learning.

Baidu trained an AI agent to navigate the world like a parent teaches a baby

Tags – Innovation, Robotics

Baidu taught an AI agent to navigate 2D space using only natural language, the same basic feedback mechanism a human parent uses with an infant. This is progress towards building AI that can be taught in the same way as humans. The next target for the Chinese giant is to teach a physical robot to navigate in 3D space, which is more realistic. This application, based on reinforcement learning, has huge implications for the robotics industry.

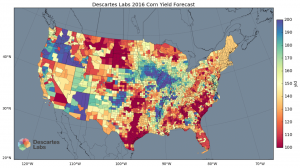

Machine learning creates living atlas of the planet

Tags – Startup, Food Management

Descartes Labs, a start-up in New Mexico, uses satellite imagery and AI to predict food supplies and crisis-level food shortages months in advance. This leaves enough time to mount orderly humanitarian responses or optimize food supply networks. By processing these images and data with its machine learning algorithms, Descartes Labs collects remarkably in-depth information, such as distinguishing individual crop fields and determining a specific field’s crop by analyzing how sunlight reflects off its surface. Once the type of crop has been established, the machine learning program monitors the field’s production levels.

Disney understanding audience personality to better target them

Tags – Innovation, Behavioural Analytics

Disney Research’s Maarten Bos explains how his team of behavioral scientists conducted a series of studies on how people react to targeted marketing that uses images, and discusses how this information might be used within Disney and beyond. If done intelligently and diligently, this could change the way we do brand marketing.

“Entrupy” uses Deep learning to spot product authenticity

Tags – Startup, Computer Vision, Deep Learning

“Entrupy” is a start-up that uses computer vision algorithms to detect counterfeit products. It created a portable scanning device that instantly detects imitation designer bags by taking microscopic pictures that capture details of the material, processing, workmanship, serial number, and wear and tear. It then uses deep learning to compare the images against a vast database that includes top luxury brands. If the bag is deemed authentic, users immediately get a Certificate of Authenticity.

Detecting heart disease using Deep Learning

Tags – Startup, Healthcare

Cardiogram, in partnership with the University of California, San Francisco, modified an Apple Watch so that it can detect atrial fibrillation (the most common heart arrhythmia) with higher accuracy than previously validated methods. They achieved this using deep learning techniques. As soon as the condition is detected, the device could send you a notification: “We noticed an abnormality in your heartbeat. Want to chat with a cardiologist?” This can potentially shorten the time between the onset of the disease, its detection, and its care.

Facebook has decreased the training time for visual recognition models

Tags – Innovation, Deep Learning

Every minute spent training a deep learning model is a minute not spent on something else, and in today’s fast-paced world of research, that minute is worth a lot. Facebook published a paper detailing its own approach to this problem. The company says it has managed to reduce the training time of a ResNet-50 deep learning model on ImageNet from 29 hours to one.

IBM Watson automatically generated highlight reels of Wimbledon

Tags – Innovation, Journalism

Previously, creating highlight packages and annotating photographs was the responsibility of a human. This year, the job was placed in the hands of the Watson AI.

Watson can generate highlight packages without any human input. It can watch a video feed and identify the most pertinent parts of a match, picking up on cues such as players shaking hands, gesticulating in celebration, or something as simple as the volume of the audience.

DeepMind created artificial agents that can imagine and plan ahead

Tags – Innovation, Reinforcement learning

DeepMind researchers created what they’re calling “imagination-augmented agents,” or I2As, which use a neural network trained to extract from the environment any information that could be useful in making decisions later on. These agents can create, evaluate and follow through on plans. To construct and evaluate future plans, the I2As “imagine” actions and outcomes in sequence before deciding which plan to execute. They can also choose how they want to imagine: options include trying out different possible actions separately or chaining actions together in a sequence.

Replika, a chatbot that creates a digital representation of you the more you interact with it

Tags – Innovation, Artificial Intelligence

Replika is a shadow-bot that tracks what you’re up to on your computer and mimics your style, attitude, and tendencies in order to text like you would. To give an example, the inventor used it to mimic the presence of a dearly departed friend.

Using Twitter as a tool to forecast crime

Tags – Predictive Analysis, Twitter Mining

University of Virginia Assistant Professor Matthew Gerber is using Twitter data to predict crime in order to give police a digital spotlight on geographical crime hotspots. He demonstrated that, by combining older forecasting models with new tweets, he was able to predict 19 of 25 types of crime. This is another step towards detecting people’s sentiment on social media and taking precautionary measures.

HireVue is using AI to analyze word choice, tone, and facial movement of job applicants who do video interviews

Tags – Human Resources, Computer Vision, Natural Language Processing

HireVue is using AI in the human resources space to support hiring decisions. The company uses video from job interviews to assess candidates’ facial expressions, body language, tone of voice, and keywords to predict which applicants are going to be the best employees. This technology could significantly change the HR industry.

E&Y using Email and Calendar data to understand how employees work

Tags – Behavioural Analytics

Ernst and Young, one of the largest accounting firms in the US, is using calendar and email data from its employees to identify patterns around who is engaging with whom, which parts of the organization are under stress, and which individuals are most active in reaching across company boundaries.

Carnegie Mellon’s ‘Superhuman AI’ bests leading Texas Hold’em poker professionals

Tags – Innovation, Game agents

CMU has released the secret recipe behind its superhuman AI that beats professional players at poker. This is significant because no-limit Texas Hold’em is what’s called an “imperfect-information game,” which means that not all information about all elements in play is available to all players at all times. That’s in contrast to games like Go and chess, both of which feature a board containing all the pieces in play, plainly visible to both competitors.

Deep Learning to emulate cyber threats

Tags – Innovation, Cyber Security, Deep Learning

Scientists have harnessed the power of artificial intelligence (AI) to create a program that, combined with existing tools, figures out passwords. The work could help average users and companies measure the strength of passwords, says Thomas Ristenpart, a computer scientist who studies computer security at Cornell Tech in New York City but was not involved with the study. “The new technique could also potentially be used to generate decoy passwords to help detect breaches.”

Mozilla releases Speech Recognition model and Voice dataset

Tags – Product Release, Speech Recognition, Deep Learning

To speed up advancements in the audio domain, Mozilla has released the world’s second-largest publicly available voice dataset and open sourced its speech recognition technology. This release is likely to accelerate progress in speech recognition in general.

Course content for Fast.ai’s “Cutting Edge Deep Learning for Coders – Part 2”

Tags – Deep Learning

Videos and course content for the course “Cutting Edge Deep Learning for Coders – Part 2” are now available to the general public. For those who haven’t had a chance to see Part 1, that course introduces you to the basics of deep learning in a practical manner. Part 2 gets into the details of deep learning and introduces you to the cutting-edge research going on in the industry.

China will allow self-driving cars to be tested on public roads

Tags – Self-driving cars, Transportation

China is opening up its roads to self-driving cars. The Beijing Municipal Transport Commission released a statement saying that on certain roads and under certain conditions, companies registered in China will be able to test their autonomous vehicles.

Emergence of Automated Machine Learning

Tags – Automated Machine Learning

Automated machine learning is the next big thing. It handles much of the heavy lifting in the data science lifecycle on its own. This is a powerful idea: where we previously had to worry about tuning parameters and hyperparameters by hand, automated machine learning systems can search for good settings themselves, using a number of different methods.
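One of the simplest methods for automating hyperparameter tuning is random search: sample candidate settings, score each one, and keep the best. The sketch below shows the idea in plain Python. Everything in it is an assumption made for illustration: `validation_error` is a hypothetical stand-in for "train a model and measure its error on held-out data", and the hyperparameter names and ranges are invented. Real automated machine learning systems are considerably more sophisticated.

```python
import random


def validation_error(learning_rate, depth):
    """Hypothetical stand-in for training a model and scoring it on held-out data."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (depth - 6) ** 2


def random_search(objective, space, n_trials=200, seed=0):
    """Sample n_trials settings from the search space and return the lowest-error one."""
    rng = random.Random(seed)  # fixed seed so the search is reproducible
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {name: sampler(rng) for name, sampler in space.items()}
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Invented search space: each entry draws one candidate value.
space = {
    "learning_rate": lambda rng: rng.uniform(0.001, 0.5),
    "depth": lambda rng: rng.randint(2, 12),
}

best_params, best_score = random_search(validation_error, space)
print(best_params, best_score)
```

In practice, the expensive part is the objective itself, since each call means training a model, which is why smarter search strategies than uniform sampling are an active area of work.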

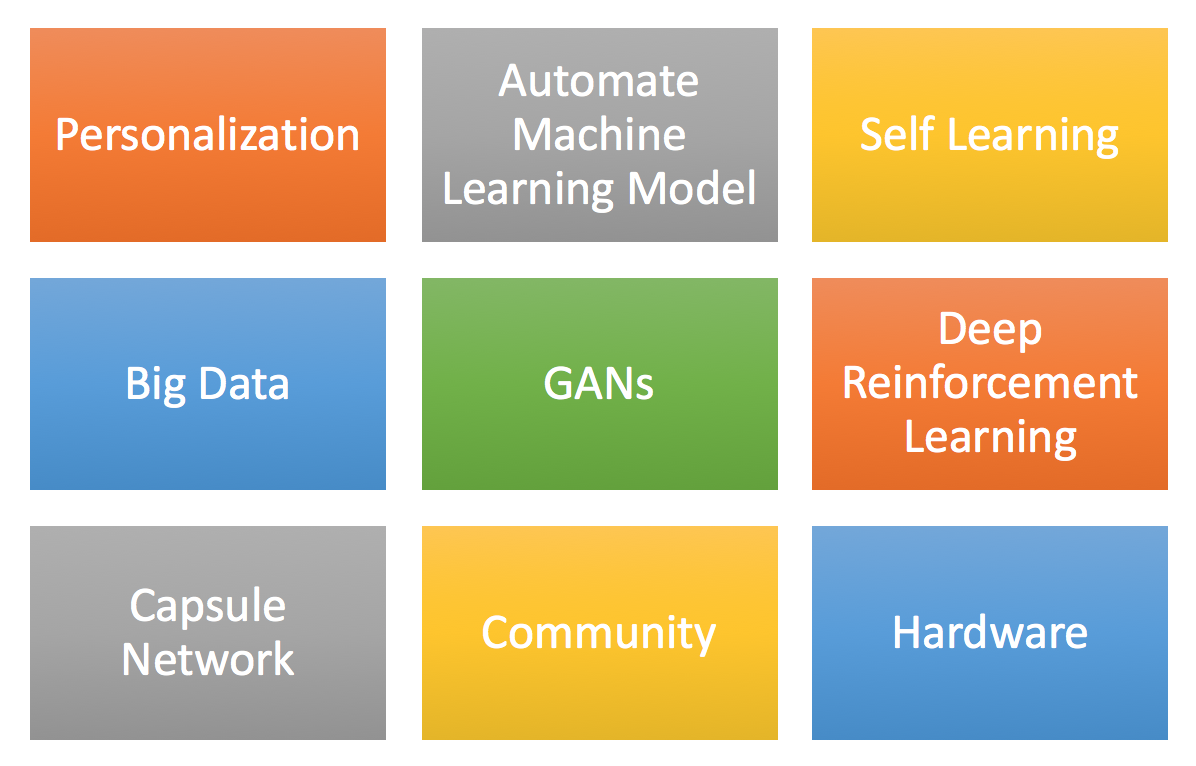

What more can we expect in 2018?

There is so much happening in the industry that it’s difficult to keep up with current trends. And the field will continue to grow as cutting-edge research is turned into products for ordinary people. For example, look at the impact deep learning research has had on computer vision, where applications like Face ID are already here and self-driving cars are just around the corner. In the future, you will certainly see a boom in applications driven by deep learning techniques.

A few things that I am particularly looking forward to:

- Will Hinton’s Capsule Networks be the next big deal in the deep learning scene?

- Research in data science will become more and more community driven

- Tools will become simpler to use, with most of the manual details being automated.

- It will become evident that ethical rules and regulations need to be put in place for artificial agents – especially in transportation

- Hardware will be cheaper and more efficient – with a tendency to go to AI enabled chips

- Learning-wise, the focus will shift to self-learning as the availability of open courses increases

- Automated machine learning will become valuable

- GANs will become more functional, and industry will start using them

- Deep Reinforcement Learning is the most general-purpose of all learning techniques, so it could be used in the widest range of business applications.

- You can expect a development towards making a black box model explainable

- There will be many more data sources, such as IoT devices, CCTV, social media and others, and this information will help us build better and more automated systems.

As Andrej Karpathy, an eminent figure in the data science industry, explains:

Neural networks are not just another classifier, they represent the beginning of a fundamental shift in how we write software. They are Software 2.0. Software 2.0 is not going to replace the software we know now, but it is going to take over increasingly large portions of what software is responsible for today.

End Notes

This article lists a few of the groundbreaking events that happened in the data science industry in 2017 and the implications they have for data science professionals. It also sketches where these advancements may lead in the near future.

If you know of a ground-breaking event and want to share it with the community, please leave a comment below.