Introduction

The data scientist in me is living a dream – I can see top tech companies coming out with products close to the area I work on.

If you saw the recent Apple iPhone X launch event, you know the iPhone X comes with some really cool features like FaceID, Animoji and augmented reality out of the box, which use the power of machine learning. The hacker in me wanted to get my hands dirty and figure out what it takes to build a system like that.

When I probed further, the answer was CoreML, Apple’s official machine learning kit for developers. It works with iPhone, MacBook, Apple TV and Apple Watch; in short, every Apple device.

Another interesting announcement was that Apple has designed a custom GPU and an advanced processing chip, the A11 Bionic, with a neural engine optimised for machine learning, for the latest iPhones.

With a powerful computing engine at its core, the iPhone will now be open to new avenues of machine learning and the significance of CoreML will only rise in the coming days.

By the end of this article, you will see what Apple CoreML is and why it is gaining momentum. We will also look into the implementation details of CoreML by building a message spam classification app for iPhone.

We will finish off the article by objectively looking at its pros and cons.

Table of Contents

- What is CoreML?

- Setting up the system

- Case Study: Implementing a spam message classifier app for iPhone

- Pros and Cons of using CoreML

1. What is CoreML?

Apple launched CoreML this year at its annual developer conference, WWDC (Apple’s equivalent of Google I/O), with a lot of hype. To better understand CoreML’s role, we need a bit of context.

A little context for CoreML

Interestingly, this is not the first time that Apple has come out with a framework for machine learning on its devices. Last year it launched a pair of libraries for the same purpose:

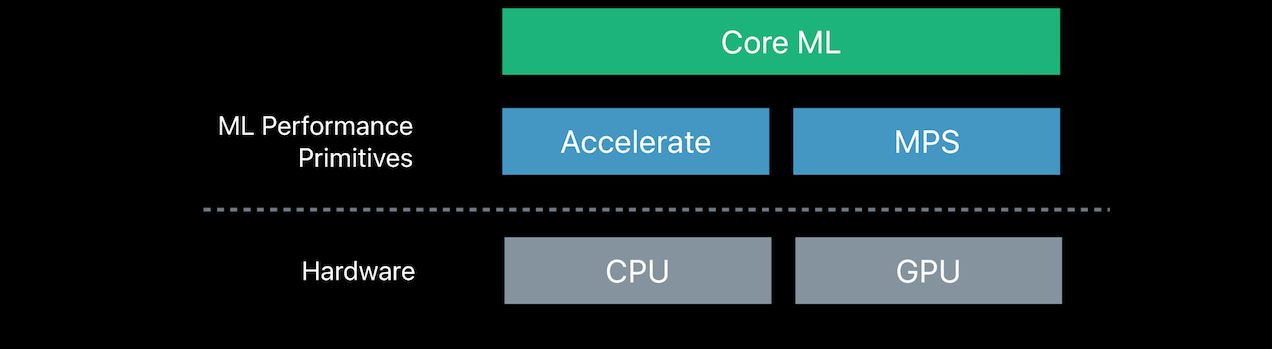

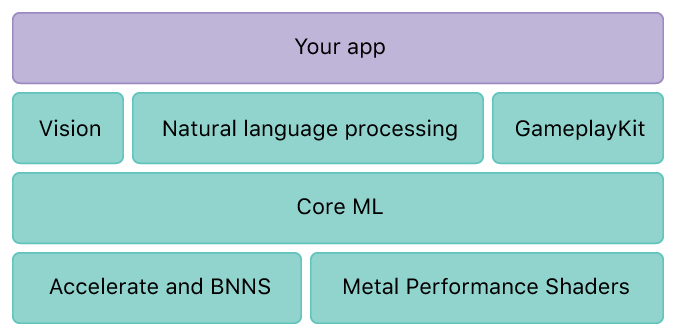

- Accelerate and Basic Neural Network Subroutines (BNNS) – Efficiently utilize CPU for predictions using Convolutional Neural Networks.

- Metal Performance Shaders CNN (MPSCNN) – Efficiently utilize GPU for predictions using Convolutional Neural Networks.

The difference was that one was optimized for the CPU and the other for the GPU. The reason is that during inference the CPU can sometimes be faster than the GPU, whereas during training the GPU is almost always faster.

These multiple frameworks created a lot of confusion among developers. Since they were quite close to the hardware (for high performance), they were also difficult to program in.

Enter CoreML

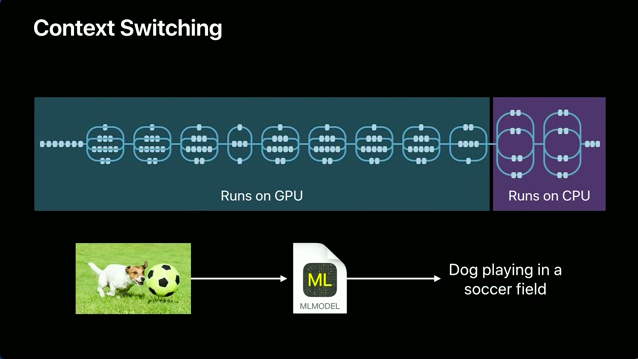

CoreML provides another layer of abstraction over the previous two libraries and gives an easy interface to achieve the same level of efficiency. Another benefit is that CoreML takes care of context switching between the CPU and GPU itself while your app is running.

For example, if you have a memory-heavy task that involves dealing with text (natural language processing), CoreML will automatically run it on the CPU. If you have a compute-heavy task like image classification, it will use the GPU. If your app has both kinds of functionality, CoreML handles that automatically too, so you get the best of both worlds.

What else does CoreML provide?

CoreML also comes with three libraries built on top of it:

- Vision: A library that provides high-performance image analysis and computer vision techniques to identify faces, detect features, and classify scenes in images and video.

- Foundation (NLP): A library that, among other things, provides natural language processing functionality.

- GameplayKit: A library for game development that also provides game AI, for example decision trees.

All of the above libraries are, again, very easy to use and provide a simple interface for a range of tasks. With these libraries, the final structure of CoreML would look something like this:

Notice that this design gives your iOS application a nice modular structure. You have different layers for different tasks, and you can combine them in a variety of ways (for example, using NLP together with image classification in your app). You can read more about these libraries here: Vision, Foundation and GameplayKit. Well, that is enough theory for now; it is time to get our hands dirty!

2. Setting up the System

To make full use of CoreML, you need the following set up:

- OS: macOS (Sierra 10.12 or above)

- Python 2.7 and pip: You can download Python for Mac from here. To install pip, open your terminal and run the following command:

sudo easy_install pip

- coremltools: This package converts your Python models into a format that CoreML can understand. To install it, open your terminal again and run:

sudo pip install -U coremltools

- Xcode 9: This is the default software used to build apps for Apple devices. You can download it from here. To download Xcode, you will first have to log in with your Apple ID.

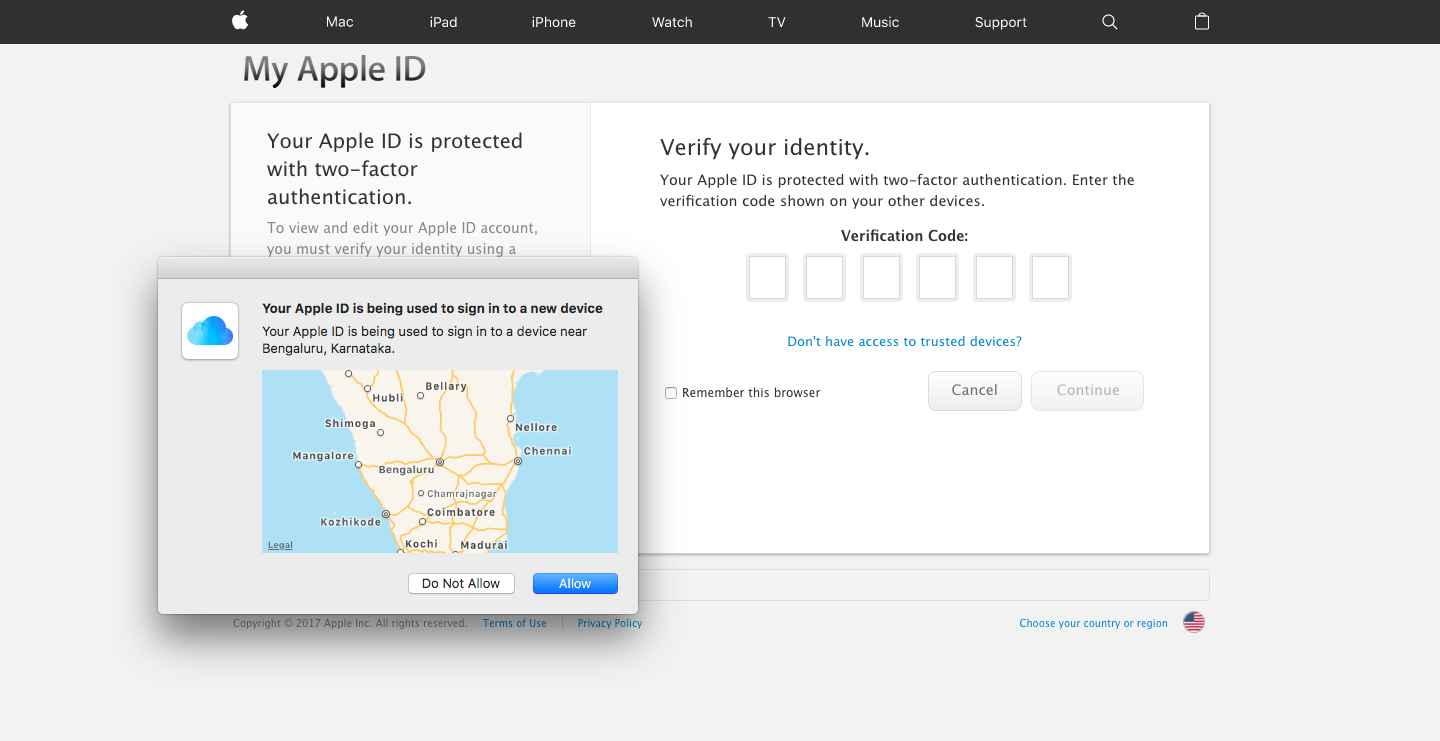

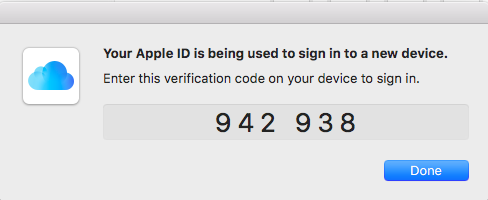

Once you log in, you will have to verify your Apple ID. You will receive a notification about this on the device registered with your Apple ID.

Select “Allow” and type the six-digit passcode it shows into the website.

Once you do this, you will be shown a download option, and you can download Xcode from there. Now that our system is set up and ready, let’s move on to the implementation!

3. Case Study: Implementing a spam message classifier for iPhone

We will look at two important ways of using CoreML: converting a trained model into CoreML format, and integrating that model into an app. Let’s start!

Converting your machine learning model into CoreML format

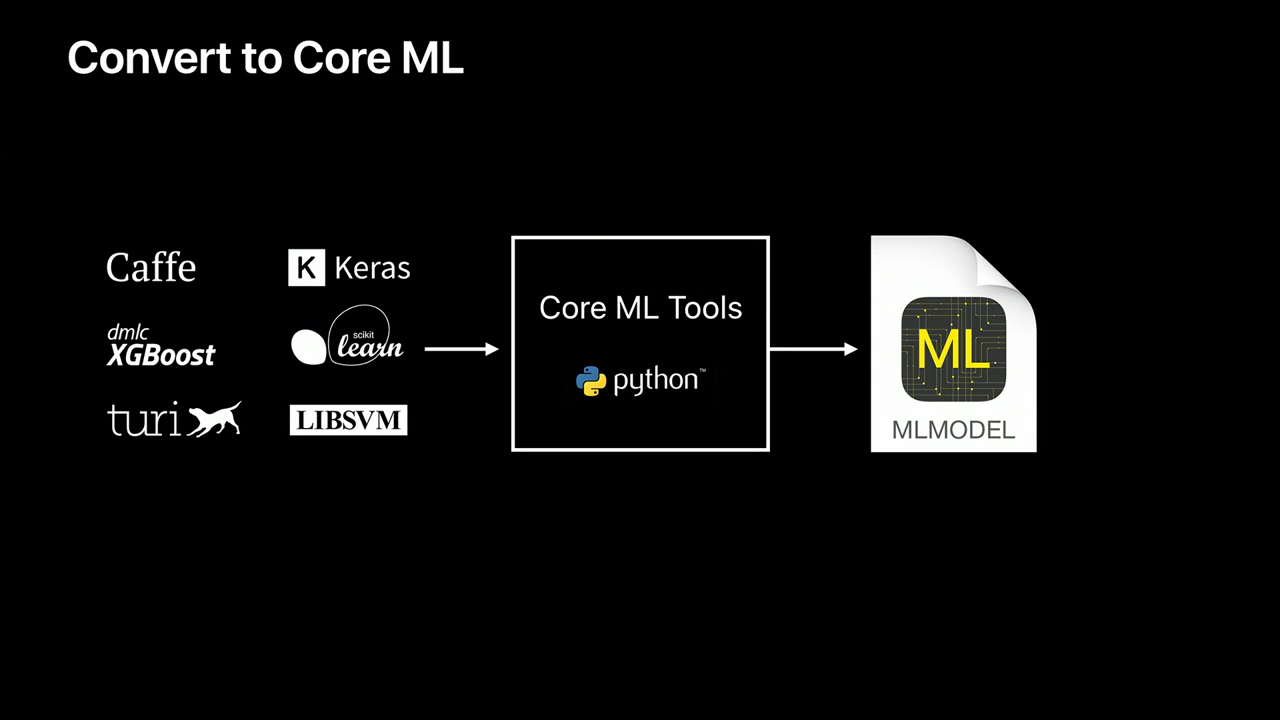

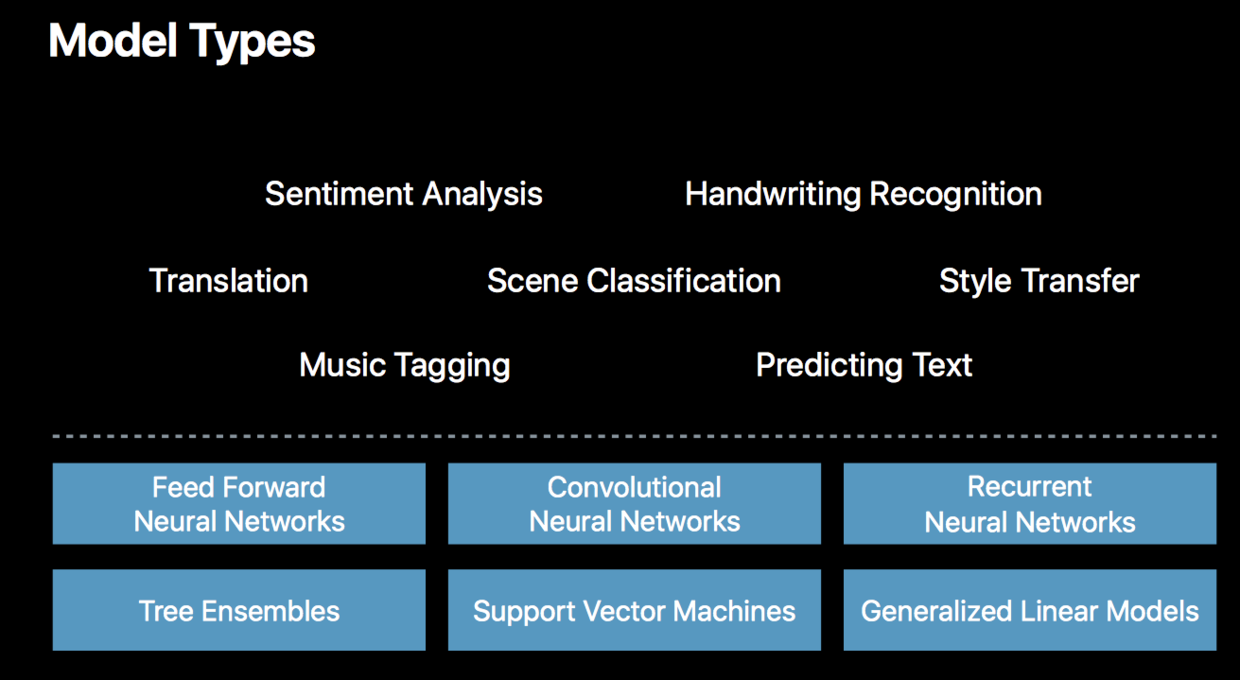

One of CoreML’s strengths, or should I say a wise decision by its creators, was to support conversion of trained machine learning models built in other popular frameworks like sklearn, Caffe and XGBoost.

This keeps the data science community on board. Data scientists can experiment and train their models in their favourite environment, then simply import the models into their iOS/macOS apps.

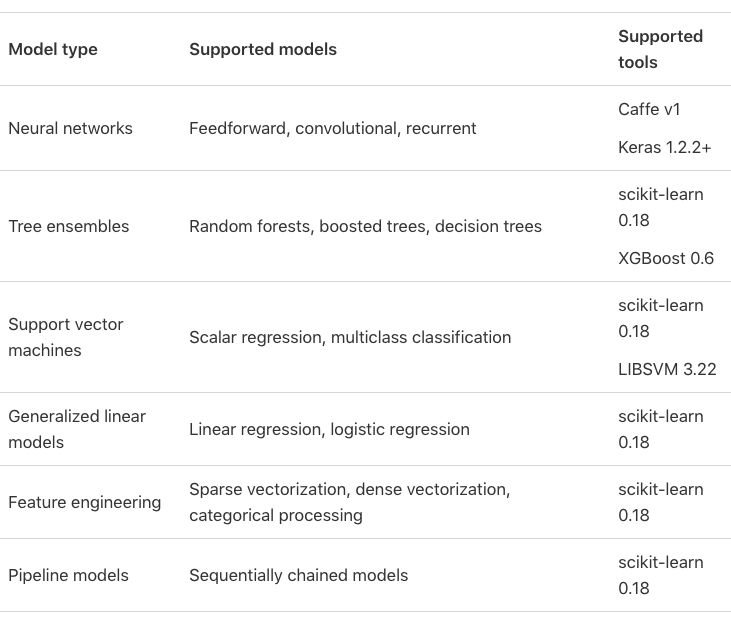

The following are the frameworks that CoreML supports right out of the box:

What is mlmodel?

To make the conversion process simple, Apple designed its own open format, called mlmodel, for representing machine learning models across frameworks. This model file contains a description of the layers in your model, the inputs and outputs, the class labels, and any preprocessing that needs to be done on the data. It also contains all the learned parameters (the weights and biases).

The conversion flow looks like this:

- Train a model in your favorite framework

- Convert the model to .mlmodel using the coremltools Python package

- Use the model in your app

For this example, we will build a spam message classifier in sklearn and then port the same model to CoreML.

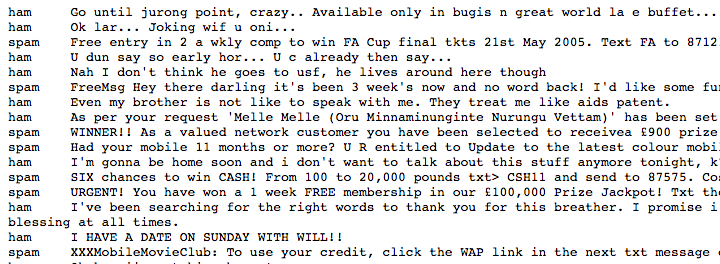

About Spam Collection dataset

The SMS Spam Collection v.1 is a public set of labelled SMS messages collected for mobile phone spam research. It consists of a single collection of 5,574 real, non-encoded English messages, each tagged as either legitimate (ham) or spam.

You can download the dataset from here.
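Each line of the collection holds a label and a message separated by a tab. The loading code below relies on this layout, reading the label from column 0 and the message from column 1. The following minimal sketch parses lines in that layout and counts the labels. The helper name `parse_sms_collection` and the two sample lines are made up for illustration. Splitting only on the first tab is a small assumption that guards against tabs inside a message.

```python
from collections import Counter

def parse_sms_collection(lines):
    """Split each 'label<TAB>message' line into a (label, message) pair."""
    pairs = []
    for line in lines:
        line = line.rstrip("\r\n")
        if not line:
            continue  # skip blank lines, e.g. an empty final line
        label, message = line.split("\t", 1)  # split on the first tab only
        pairs.append((label, message))
    return pairs

# Two made-up lines in the dataset's tab-separated layout
sample = [
    "ham\tSee you at lunch?\n",
    "spam\tYou have WON a prize! Reply WIN now\n",
]
pairs = parse_sms_collection(sample)
print(Counter(label for label, _ in pairs))
```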

Build a basic model

We will build a basic model using LinearSVC in sklearn, with the TF-IDF of the message text as the model’s features. TF-IDF (term frequency-inverse document frequency) is a natural language processing technique that weights each word by how often it appears in a document, discounted by how many documents contain it. Words that distinguish one document from the others therefore get high scores. If you would like to learn more about NLP and tf-idf, you can read this article. Here is the code:

    import numpy as np
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.svm import LinearSVC

    # Read and parse the data: each line is "<label>\t<message>"
    sms_data = []
    with open('SMSSpamCollection.txt', 'r') as raw_data:
        for line in raw_data:
            # Drop the line ending and split on the first tab only
            split_line = line.rstrip("\r\n").split("\t", 1)
            sms_data.append(split_line)

    # Split the data into messages (X) and labels (y)
    sms_data = np.array(sms_data)
    X = sms_data[:, 1]
    y = sms_data[:, 0]

    # Build the tf-idf vector representation of the messages
    vectorizer = TfidfVectorizer()
    vectorized_text = vectorizer.fit_transform(X)

    # Train a LinearSVC model on the tf-idf features
    text_clf = LinearSVC()
    text_clf = text_clf.fit(vectorized_text, y)
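`TfidfVectorizer` hides the exact arithmetic. According to the scikit-learn documentation, its default settings do four things:

- lowercase the text
- keep tokens of two or more word characters
- multiply raw term counts by a smoothed idf, ln((1 + n) / (1 + df)) + 1
- scale each vector to unit length

The pure-Python sketch below reproduces those defaults on a made-up toy corpus so the numbers can be checked by hand. It is an illustration, not sklearn’s implementation, and the helper names are hypothetical. The exact recipe matters later, because the iPhone app has to build this kind of vector on its own.

```python
import math
import re
from collections import Counter

# sklearn's default token_pattern: runs of 2+ word characters
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")

def tokenize(text):
    """Lowercase the text and extract tokens as the default settings do."""
    return TOKEN_RE.findall(text.lower())

def fit_idf(docs):
    """Return a sorted vocabulary and the smoothed idf of each term."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))  # count each term once per document
    vocab = sorted(df)
    idf = {t: math.log(float(1 + n) / (1 + df[t])) + 1 for t in vocab}
    return vocab, idf

def transform(doc, vocab, idf):
    """Raw term counts times idf, then scaled to unit L2 norm."""
    counts = Counter(tokenize(doc))
    vec = [counts[t] * idf[t] for t in vocab]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec

# Toy corpus, made up for illustration
docs = ["Free entry to win a prize", "See you at lunch", "Win a free lunch"]
vocab, idf = fit_idf(docs)
vec = transform("free lunch", vocab, idf)
print(vocab)
print(vec)
```

Here "free" and "lunch" each appear in two of the three documents. They therefore share the same idf and end up with equal weights of 1/√2 ≈ 0.707 in the normalized vector.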

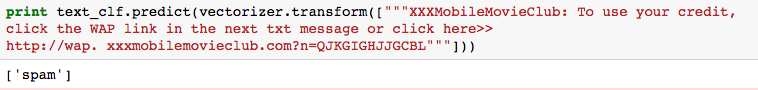

That builds our model. Let’s test it with a spam message:

    # Test the model on a spam message (print() works in both Python 2.7 and 3)
    print(text_clf.predict(vectorizer.transform(["""XXXMobileMovieClub: To use your credit, click the WAP link in the next txt message or click here>> http://wap. xxxmobilemovieclub.com?n=QJKGIGHJJGCBL"""])))

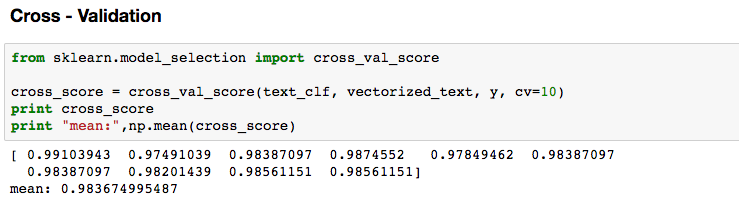

Nice, our model flags it as spam! A single example proves little, though, so let’s add some cross-validation too:

    # Cross-validation: score the model on 10 folds of the data
    from sklearn.model_selection import cross_val_score
    cross_score = cross_val_score(text_clf, vectorized_text, y, cv=10)
    print(cross_score)
    print("mean: %f" % np.mean(cross_score))
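With `cv=10`, `cross_val_score` splits the data into ten folds. It trains on nine folds, scores on the held-out one, repeats this ten times, and returns the ten scores. The sketch below shows a plain, unshuffled fold layout. It is a simplification: for a classifier like `LinearSVC`, sklearn actually uses stratified folds (`StratifiedKFold`), which also keep the ham/spam ratio roughly equal in each fold. `kfold_indices` is a hypothetical helper.

```python
def kfold_indices(n_samples, n_folds):
    """Yield (train, test) index lists for plain, unshuffled k-fold CV."""
    # The first n_samples % n_folds folds get one extra sample
    fold_sizes = [n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
                  for i in range(n_folds)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

sizes = [len(test) for _, test in kfold_indices(5574, 10)]
print(sizes)  # [558, 558, 558, 558, 557, 557, 557, 557, 557, 557]
```

For the 5,574 messages in this dataset, the first four folds hold 558 messages each and the remaining six hold 557.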

Now that we have built our model, we need to port it to the .mlmodel format to make it compatible with CoreML. We will use the coremltools package that we installed earlier. The following code converts our model into .mlmodel format:

    import coremltools

    # Convert the sklearn model: "message" names the input, "spam_or_not" the output
    coreml_model = coremltools.converters.sklearn.convert(text_clf, "message", "spam_or_not")

    # Set descriptions of the model and its input and output
    coreml_model.short_description = "Classify whether message is spam or not"
    coreml_model.input_description["message"] = "TFIDF of message to be classified"
    coreml_model.output_description["spam_or_not"] = "Whether message is spam or not"

    # Save the model
    coreml_model.save("SpamMessageClassifier.mlmodel")

What happened here?

We first import the coremltools package in Python. Then we use one of its converters to convert our model. In this case we use converters.sklearn, because our model was built in sklearn. We pass the model object, the input variable name and the output variable name to .convert(). We then set descriptions for the model, its inputs and its outputs. Finally, we call .save() to save our model file.
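What does the converted file actually hold for this model? For a binary linear model such as `LinearSVC`, the learned parameters are one weight per tf-idf feature (sklearn’s `coef_`) plus a bias (`intercept_`). A prediction comes down to a dot product. The sketch below shows that arithmetic. It describes how sklearn’s binary linear classifiers decide, not CoreML’s internal code, and the weights are made up. sklearn sorts class labels, so with "ham" and "spam" a positive score maps to "spam".

```python
def linear_svc_predict(x, weights, bias, classes=("ham", "spam")):
    """Binary linear classifier: score = w . x + b; a positive score picks classes[1]."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return classes[1] if score > 0 else classes[0]

# Made-up two-feature example: score = 0.5 * 2.0 + 0.0 * (-1.0) - 0.5 = 0.5
print(linear_svc_predict([0.5, 0.0], [2.0, -1.0], -0.5))  # spam
```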

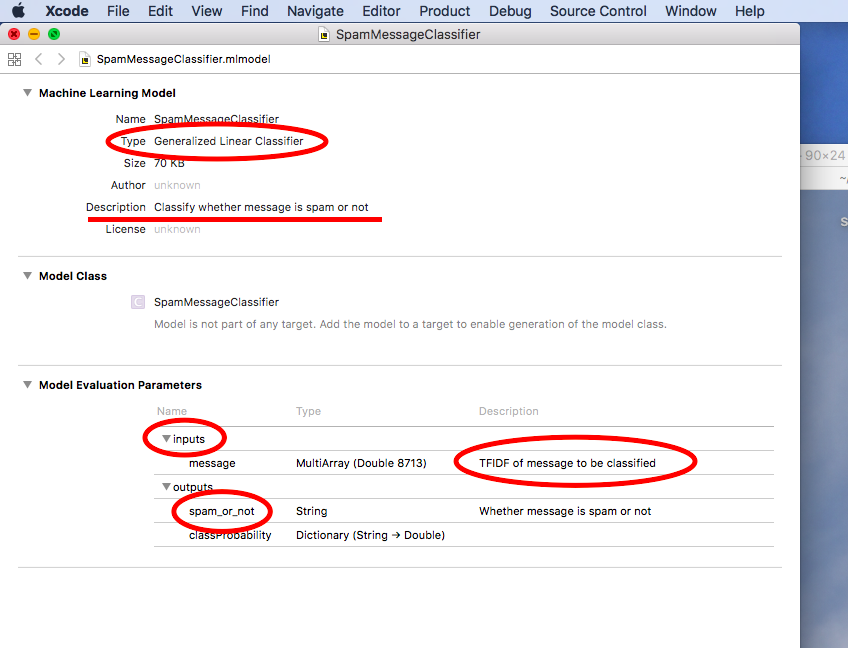

When you double-click the model file, it should open in an Xcode window.

As you can see, the model file shows details about the type of model, its inputs and outputs, their types, etc. I have highlighted all this information in the figure above. You can match the descriptions with the ones we provided while converting to .mlmodel.

That is how easy it is to import your model into CoreML. Now that your model is in the Apple ecosystem, the real fun starts!

Note: The complete code file for this step can be found here. Read more about coremltools here and different types of converters available here.
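One more artifact matters for the app. The Swift `tfidf()` function used later reads a `wordlist.txt` file bundled with the project. Position i in the vector it builds must correspond to input column i of the model. The downloaded project appears to include this file already, since the steps never ask you to create it. As a hypothetical sketch of how one could be produced, the helper below writes the terms of `vectorizer.vocabulary_` one per line, in column order. `vocabulary_` is a real sklearn attribute that maps each term to its column index. The newline after the last term matches the Swift code’s `removeLast() // Trailing newline.` step. The sketch assumes Python 3 (for the `encoding` argument).

```python
import os
import tempfile

def write_wordlist(vocabulary, path):
    """Write one term per line, ordered by its column index in the vectorizer."""
    terms = sorted(vocabulary, key=vocabulary.get)
    with open(path, "w", encoding="utf-8") as f:
        for term in terms:
            f.write(term + "\n")  # every line, including the last, ends with a newline
    return terms

# Toy stand-in for vectorizer.vocabulary_ (term -> column index)
toy_vocabulary = {"win": 2, "free": 0, "lunch": 1}
out_path = os.path.join(tempfile.mkdtemp(), "wordlist.txt")
terms = write_wordlist(toy_vocabulary, out_path)
print(terms)  # ['free', 'lunch', 'win']
```

With the trained model, the call would be `write_wordlist(vectorizer.vocabulary_, "wordlist.txt")`.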

Integrating the model with our app

Now that we have trained our model and ported it to CoreML, let us use that model and build a spam classifier app for iPhone!

We will run our app on a simulator. A simulator is software that shows how an app will look and behave as if it were running on a real phone. This saves a lot of time, because we can experiment with our code and fix bugs before trying the app on an actual phone. Have a look at what the end product will look like:

Downloading the project

I have already built a basic UI for our app and it is available on GitHub. Use the following commands to get it up and running:

    git clone https://github.com/mohdsanadzakirizvi/CoreML-on-iPhone.git
    cd CoreML-on-iPhone/Practice\ App/
    open coreml\ test.xcodeproj/

This will open our project using Xcode.

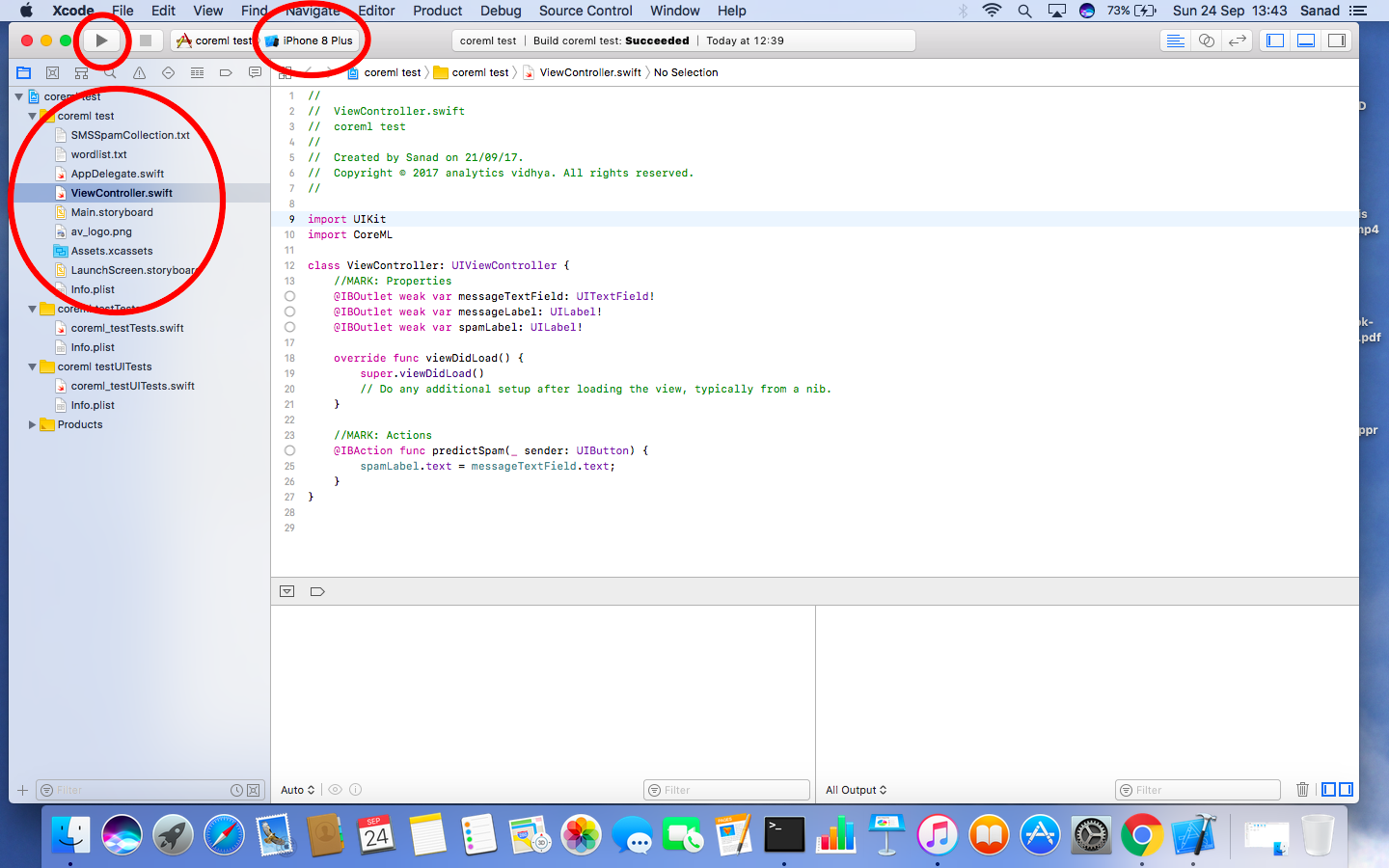

I have highlighted three main regions in the Xcode window:

- The play button on the top left is used to start the app on the simulator.

- Just below the play button, the files and folders of our project are displayed. This is called the project navigator; it helps you navigate between the files and folders of your project.

- Next to the play button, “iPhone 8 Plus” is written; this is the target device you want to simulate. You can click on it and select iPhone 7 from the drop-down.

Let’s first run our app and see what happens. Click the play button on the top left to run our app in the simulator. Try typing some text in the box and clicking the Predict button. What happens?

For now, our app doesn’t do much; it just prints whatever has been typed in the box.

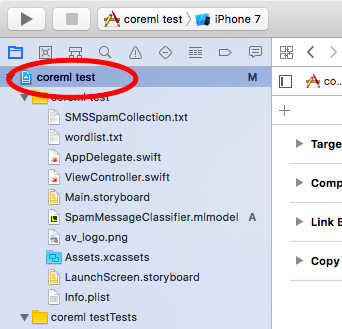

Adding a pre-trained model into your app

This bit is fairly easy:

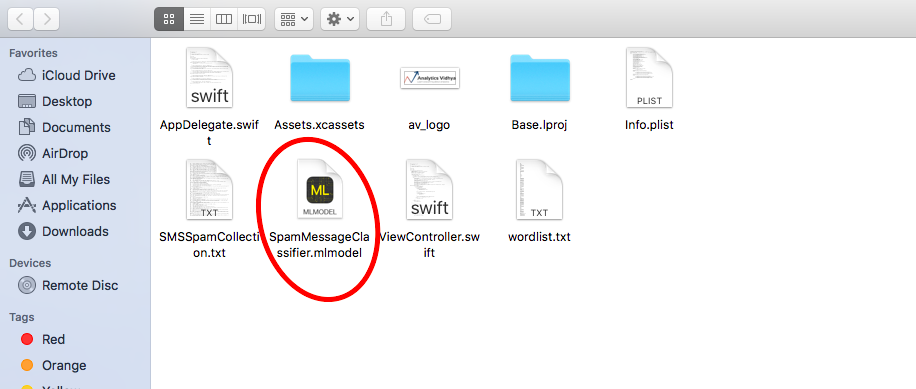

- Drag your .mlmodel file into the Xcode window in the project navigator pane.

- When you do that, a window with some options will pop up; leave the default options as they are and click “Finish”.

- When you drag a file like this into Xcode, it automatically creates references to the file in the project. This way you can easily access that file in your code.

Here is the whole process for reference:

Compiling the model

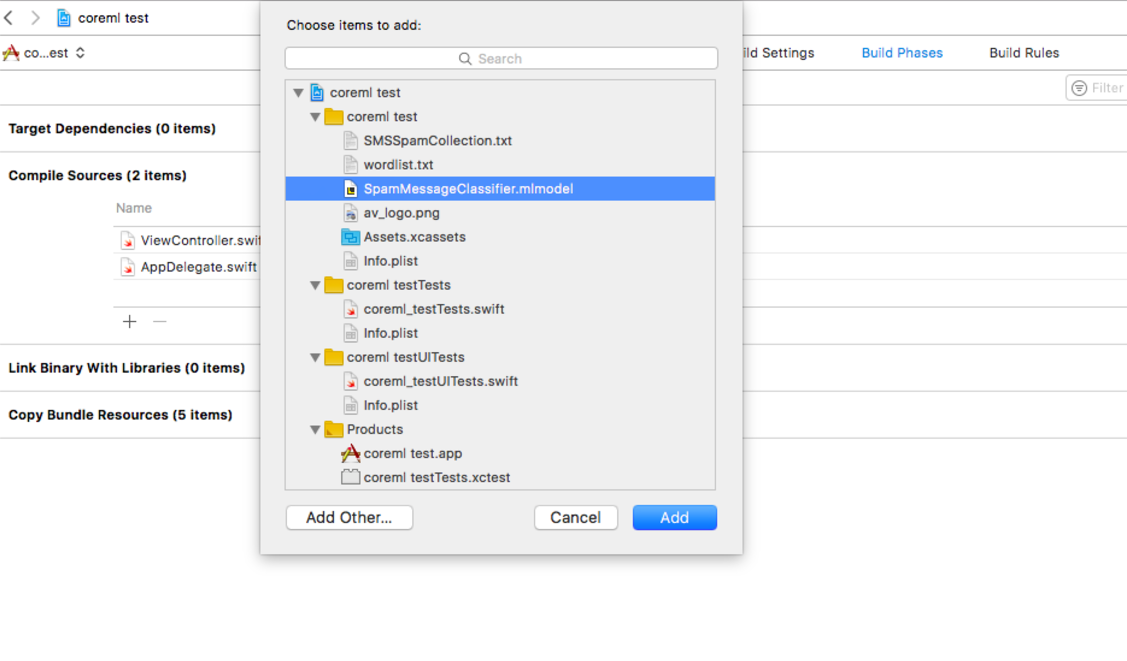

Before we can start making inferences with our model, we need to tell Xcode to compile the model during the build stage. To do that, follow these steps:

- In the project navigator pane, select the file with the blue icon.

- This will open project settings on the right-hand side. Click on Compile Sources and select the + icon.

- In the window that appears select the SpamMessageClassifier.mlmodel file and click Add.

Now every time we run our app, Xcode will compile our machine learning model so that it can be used for making predictions.

Using the model in code

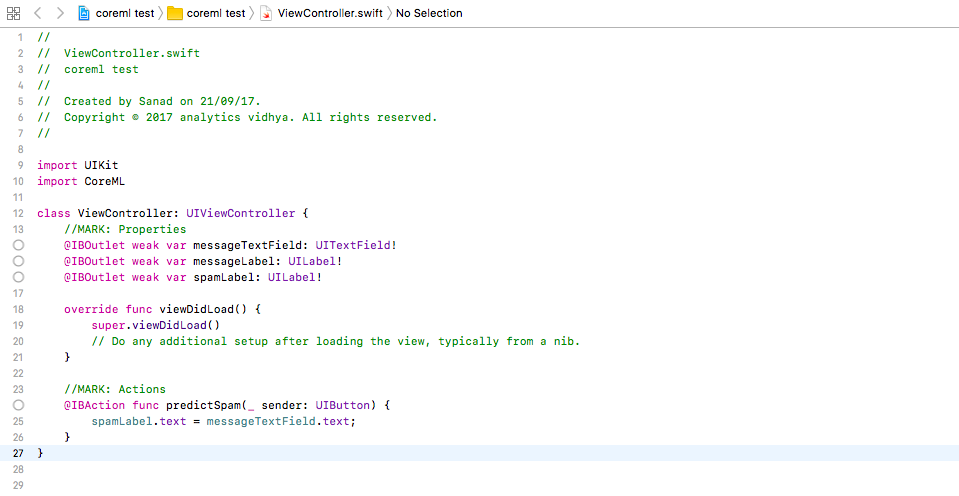

Apps for Apple devices are commonly programmed in Swift, and ours is no exception. You don’t need to learn Swift to follow this tutorial, but if it interests you afterwards and you want to go deeper, you can follow this tutorial.

- In the project navigator pane, select ViewController.swift. This file contains much of the code that controls the functionality of our app.

- Look at the function predictSpam() at line 24; this is the function that will do most of the work. Delete line 25 and add the following code to the function:

    // Read the message; stop early if the text box is empty
    let enteredMessage = messageTextField.text ?? ""
    spamLabel.text = ""
    if enteredMessage.isEmpty {
        return
    }

    // Fetch the tf-idf representation of the text
    let vec = tfidf(sms: enteredMessage)
    do {
        // Get a prediction on the text
        let prediction = try SpamMessageClassifier().prediction(message: vec).spam_or_not
        print(prediction)
        if prediction == "spam" {
            spamLabel.text = "SPAM!"
        } else if prediction == "ham" {
            spamLabel.text = "NOT SPAM"
        }
    } catch {
        spamLabel.text = "No Prediction"
    }

The above code checks whether the user has entered any message in the text box. If so, it calculates the tf-idf of the text by calling a function tfidf(). It then creates an object of our SpamMessageClassifier and calls its .prediction() function, the equivalent of .predict() in sklearn. Finally, it displays an appropriate message based on the prediction.

But why is tfidf() required?

Remember that we trained our model on the tf-idf representation of the text, so it expects its input in the same format. Once we get the message entered in the text box, we call the tfidf() function on it to produce that representation. Let’s write the code for it. Copy the following code just below the predictSpam() function:

    //MARK: Functionality code
    // MLMultiArray comes from the CoreML framework, so this file needs `import CoreML`
    func tfidf(sms: String) -> MLMultiArray {
        // Get the paths of the bundled vocabulary and dataset files
        let wordsFile = Bundle.main.path(forResource: "wordlist", ofType: "txt")
        let smsFile = Bundle.main.path(forResource: "SMSSpamCollection", ofType: "txt")
        do {
            // Read the words file: one vocabulary term per line
            let wordsFileText = try String(contentsOfFile: wordsFile!, encoding: String.Encoding.utf8)
            var wordsData = wordsFileText.components(separatedBy: .newlines)
            wordsData.removeLast() // Trailing newline.

            // Read the spam collection file: one labelled message per line
            let smsFileText = try String(contentsOfFile: smsFile!, encoding: String.Encoding.utf8)
            var smsData = smsFileText.components(separatedBy: .newlines)
            smsData.removeLast() // Trailing newline.

            let wordsInMessage = sms.split(separator: " ")

            // Create a 1-D array with one slot per vocabulary term
            let vectorized = try MLMultiArray(shape: [NSNumber(integerLiteral: wordsData.count)], dataType: MLMultiArrayDataType.double)
            for i in 0..<wordsData.count {
                let word = wordsData[i]
                if sms.contains(word) {
                    // tf: occurrences of the word / number of words in the message
                    var wordCount = 0
                    for substr in wordsInMessage {
                        if substr.elementsEqual(word) {
                            wordCount += 1
                        }
                    }
                    let tf = Double(wordCount) / Double(wordsInMessage.count)

                    // idf: log(number of dataset lines / lines containing the word)
                    var docCount = 0
                    for line in smsData {
                        if line.contains(word) {
                            docCount += 1
                        }
                    }
                    let idf = log(Double(smsData.count) / Double(docCount))
                    vectorized[i] = NSNumber(value: tf * idf)
                } else {
                    vectorized[i] = 0.0
                }
            }
            return vectorized
        } catch {
            return MLMultiArray()
        }
    }

The above code computes the tf-idf representation of the message entered in the text box. To do so, it reads the vocabulary from wordlist.txt and the original dataset file SMSSpamCollection.txt (to count document frequencies), then returns the resulting vector. Save the file and re-run the simulator; your app should now work.
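You can check the numbers the app feeds the model without launching the simulator by mirroring the Swift function in Python. This is a sketch; the name `swift_style_tfidf` and the toy data are made up. It follows the Swift logic step by step, including its quirks:

- a case-sensitive substring test decides whether a word is considered at all
- tf is the word’s count divided by the number of space-separated tokens
- idf is ln(N / df), counted over whole dataset lines with their labels included

There is one deliberate difference: where the Swift code would divide by zero, the sketch returns 0.0.

```python
import math

def swift_style_tfidf(sms, words, corpus_lines):
    """Mirror of the app's Swift tfidf(sms:) logic (hypothetical helper)."""
    # Swift's split(separator: " ") drops empty pieces, so filter them out too
    tokens = [t for t in sms.split(" ") if t]
    vector = []
    for word in words:
        if word in sms:  # case-sensitive substring test, like sms.contains(word)
            # tf: exact token matches divided by the number of tokens
            word_count = sum(1 for t in tokens if t == word)
            tf = word_count / float(len(tokens))
            # df: dataset lines (labels included) containing the word as a substring
            doc_count = sum(1 for line in corpus_lines if word in line)
            # The Swift code divides by doc_count unguarded; use 0.0 instead
            idf = math.log(len(corpus_lines) / float(doc_count)) if doc_count else 0.0
            vector.append(tf * idf)
        else:
            vector.append(0.0)
    return vector

# Toy vocabulary and dataset lines, made up for illustration
words = ["free", "lunch", "win"]
corpus = ["ham\tSee you at lunch", "spam\tWin a free prize", "spam\tfree lunch win win"]
vec = swift_style_tfidf("free lunch", words, corpus)
print(vec)
```

sklearn’s default `TfidfVectorizer` works differently: it lowercases, tokenizes with a regular expression, uses raw counts and a smoothed idf, and L2-normalizes each vector. Comparing the two shows that the on-device vector only approximates the features the model was trained on. The app’s predictions may therefore occasionally differ from sklearn’s for the same message.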

4. Pros and Cons of CoreML

Like every library still in development, CoreML has its pros and cons. Let us state them explicitly.

Pros

- Optimized for on-device performance, which minimizes memory footprint and power consumption.

- On-device inference keeps user data private: you no longer need to send data to your servers to make predictions.

- On-device inference also means predictions work without an internet connection, with a shorter response time for the user.

- It decides for itself whether to run the model on the CPU or the GPU (or both).

- Because it can use CPU, you can run it on the iOS simulator (which doesn’t support GPU).

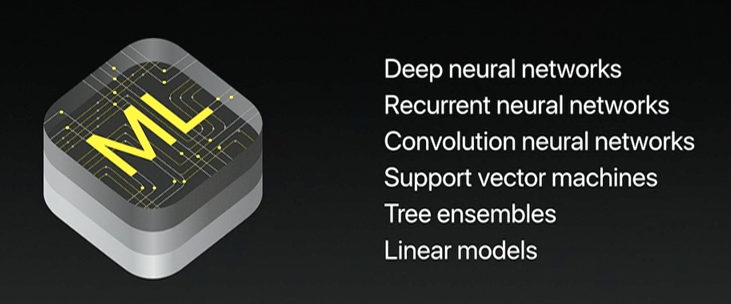

- It supports many model types, since it can import models from other popular machine learning frameworks:

  - support vector machines (SVM)

  - tree ensembles such as random forests and boosted trees

  - linear regression and logistic regression

  - neural networks: feed-forward, convolutional, recurrent

Cons

- Native support for supervised models only; there is no support for unsupervised or reinforcement learning.

- No training on the device, only inference (prediction).

- If CoreML does not support a certain layer type, you can’t use it. It is currently impossible to extend CoreML with your own layer types.

- CoreML’s conversion tools only support specific versions of a limited number of training tools (no TensorFlow, what??)

- You cannot look at the output produced by intermediate layers, you only get the prediction.

- Only supports regression & classification (clustering, ranking, dimensionality reduction, etc. are not supported)

End Notes

In this article, we learned about CoreML and its use in building a machine learning app for iPhone. CoreML is a relatively new library and hence has its own share of pros and cons. A very useful feature is that it runs locally on the device, which gives more speed and keeps data private. At the same time, it can’t yet be thought of as a full-fledged, data-scientist-friendly library. We will have to wait and see how it evolves in the coming releases.

For those of you who got stuck at some step, all of the code for this article is available on GitHub.

Also, if you want to explore CoreML in more depth these are some of the resources:

- CoreML Documentation

- WWDC 2017 Video Session – Core ML in depth

- Introduction to Core ML: Building a Simple Image Recognition App