This article was published as a part of the Data Science Blogathon.

Introduction

AWS Lambda is a serverless computing service that runs your code in response to events and automatically manages the underlying compute resources for you. You can use Lambda to run code for almost any kind of application or backend service without provisioning or managing servers.

Here are a few of AWS Lambda’s salient attributes:

Event-driven: Lambda functions are invoked in response to specific events, such as HTTP requests, database changes, or messages arriving in queues. This makes it simple to build event-driven systems and microservices that scale up or down automatically with demand.

Scalable: Lambda automatically scales your application with the volume of incoming requests, so you do not need to plan capacity or overprovision resources.

Adaptable: Lambda supports many programming languages, including Node.js, Python, C#, Go, and others, and integrates with other AWS services such as Amazon S3, Amazon DynamoDB, and Amazon Kinesis.

Simple to use: By using Lambda, you can concentrate on developing code rather than worrying about the infrastructure that supports it. There are no servers to manage, and it is quick and simple to deploy and update your code.

The following are some typical uses for AWS Lambda:

- Running backend logic for web and mobile applications

- Processing streams of data from log files or sensors

- Serving machine learning models

- Automating workflows and business processes

- Building serverless applications

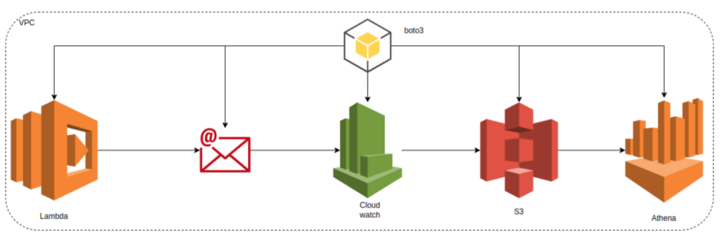

This demonstration shows how to develop an AWS Lambda function that sends emails on demand, capture the function's logs in Amazon CloudWatch after each email is delivered, export those logs to Amazon S3, and query them with Amazon Athena using the AWS SDK.

Let’s dive into the solution approach. The problem statement calls for an AWS Lambda function that uses Amazon Simple Email Service (SES) to send emails when triggered by events. We will use Python as our language of choice because of its simplicity and extensive data-centric features.

Lambda Function to Send an Email

We will define a send_email() function that uses the boto3 library to create an SES client and send an email with the specified recipient, subject, and body.

```python
import boto3


def send_email(recipient, subject, body):
    # Create an SES client
    ses = boto3.client('ses')

    # Set the parameters for the email
    params = {
        'Destination': {
            'ToAddresses': [recipient]
        },
        'Message': {
            'Body': {
                'Text': {
                    'Charset': 'UTF-8',
                    'Data': body
                }
            },
            'Subject': {
                'Charset': 'UTF-8',
                'Data': subject
            }
        },
        # Placeholder: replace with a sender address or domain verified in SES
        'Source': 'sender@example.com'
    }

    # Send the email; the response includes the SES MessageId
    return ses.send_email(**params)
```

Next, we define the handler() function, which is the entry point for the Lambda function and is invoked when the specified event occurs. In our use case, the event is expected to contain the email’s recipient, subject, and body as fields.

```python
def handler(event, context):
    # Read the email fields from the triggering event
    recipient = event['recipient']
    subject = event['subject']
    body = event['body']

    send_email(recipient, subject, body)
```
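One way to trigger the function on demand from your own code is a synchronous invocation through the SDK. The following is a minimal sketch, assuming the function is deployed under the name my-function (matching the log group used later) and that the caller passes in boto3.client('lambda'); build_email_event and invoke_send_email are hypothetical helper names.

```python
import json


def build_email_event(recipient, subject, body):
    """Build the event payload the handler expects, rejecting empty fields."""
    event = {"recipient": recipient, "subject": subject, "body": body}
    missing = [key for key, value in event.items() if not value]
    if missing:
        raise ValueError(f"missing event fields: {', '.join(missing)}")
    return event


def invoke_send_email(lambda_client, event, function_name="my-function"):
    """Invoke the Lambda synchronously and return its decoded JSON response.

    lambda_client is expected to be boto3.client("lambda"); function_name
    is an assumed name, so replace it with your own.
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function to finish
        Payload=json.dumps(event).encode("utf-8"),
    )
    # Payload is a streaming body; a handler that returns None yields "null".
    payload = json.loads(response["Payload"].read() or b"null")
    if response.get("FunctionError"):
        # Lambda reports handler exceptions here, with details in the payload.
        raise RuntimeError(f"{function_name} failed: {payload}")
    return payload
```

Because the handler above does not return a value, the decoded response is None; returning the SES MessageId from handler() would surface it here instead.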

Before this function can send mail, the sender address (or its domain) must be verified in SES. While your account is in the SES sandbox, every recipient address must be verified as well.

You can verify an address from the Amazon SES console: choose Email Addresses in the left pane, click the “Verify a New Email Address” button, enter the email address of your choice, and then follow the link in the verification email that SES sends to that address. In newer versions of the console, these options appear under Verified identities and Create identity.
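The same verification can be requested with the SDK. The sketch below takes an SES client (for example boto3.client('ses')) as a parameter; verify_sender and unverified_identities are hypothetical helper names built on the SES verify_email_identity and get_identity_verification_attributes calls.

```python
def verify_sender(ses_client, email_address):
    """Ask SES to send a verification email to email_address."""
    ses_client.verify_email_identity(EmailAddress=email_address)


def unverified_identities(ses_client, identities):
    """Return the identities whose SES verification status is not 'Success'."""
    response = ses_client.get_identity_verification_attributes(
        Identities=list(identities)
    )
    attributes = response["VerificationAttributes"]
    return [
        identity
        for identity in identities
        # Identities SES does not know about may be missing from the response.
        if attributes.get(identity, {}).get("VerificationStatus") != "Success"
    ]
```

Checking unverified_identities for both the sender and, while in the sandbox, the recipient before invoking the function avoids a rejected send_email call.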

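Analyzing the Logs with Athena

AWS Lambda automatically logs every function invocation and sends the logs to Amazon CloudWatch Logs, provided the function's execution role has CloudWatch Logs permissions (for example, through the AWSLambdaBasicExecutionRole managed policy). These logs can be used to troubleshoot issues with the function and monitor its performance.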
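To inspect these logs directly before exporting them, the CloudWatch Logs filter_log_events call can search a log group. The following is a minimal sketch, assuming the log group follows the /aws/lambda/<function-name> convention used later in this article; recent_log_messages is a hypothetical helper that takes the logs client as a parameter so it can be exercised without AWS access.

```python
import time


def recent_log_messages(logs_client, log_group_name, minutes=60, pattern=""):
    """Collect log messages from the last `minutes` minutes, following pagination.

    logs_client is expected to be boto3.client("logs"); pattern uses
    CloudWatch Logs filter-pattern syntax and is omitted when empty.
    """
    start_ms = int((time.time() - minutes * 60) * 1000)  # epoch milliseconds
    kwargs = {"logGroupName": log_group_name, "startTime": start_ms}
    if pattern:
        kwargs["filterPattern"] = pattern
    messages = []
    while True:
        response = logs_client.filter_log_events(**kwargs)
        messages.extend(event["message"] for event in response.get("events", []))
        token = response.get("nextToken")
        if not token:
            return messages
        # Request the next page of matching events.
        kwargs["nextToken"] = token
```

For example, recent_log_messages(boto3.client('logs'), '/aws/lambda/my-function', pattern='ERROR') would return the matching lines from the last hour.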

Let’s use Boto3, the AWS SDK for Python, to export the CloudWatch logs to an Amazon S3 bucket and analyze them with Amazon Athena:

Exporting

```python
import time

import boto3

# Set the names of the CloudWatch log group and S3 bucket
log_group_name = '/aws/lambda/my-function'
s3_bucket_name = 'my-log-bucket'

# Set the prefix for the S3 object key
s3_prefix = 'cloudwatch/logs'


def export_logs_to_s3():
    # Set the parameters for the CloudWatch export task.
    # fromTime and to are milliseconds since the Unix epoch; adjust the window.
    params = {
        'logGroupName': log_group_name,
        'fromTime': 1500000000000,
        'to': 1600000000000,
        'destination': s3_bucket_name,
        'destinationPrefix': s3_prefix
    }

    # Create a CloudWatch Logs client
    logs = boto3.client('logs')

    # Create the export task
    task_id = logs.create_export_task(**params)['taskId']

    # Poll until the export task leaves its in-progress states
    while True:
        task = logs.describe_export_tasks(taskId=task_id)['exportTasks'][0]
        status = task['status']['code']
        if status not in ('PENDING', 'PENDING_CANCEL', 'RUNNING'):
            break
        time.sleep(5)

    if status != 'COMPLETED':
        raise RuntimeError(f'Export task {task_id} ended with status {status}')
    return task_id


export_logs_to_s3()
```
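In practice, create_export_task fails unless the destination bucket's policy lets the CloudWatch Logs service principal read the bucket ACL and write objects. The following is a minimal sketch, assuming the bucket and the log group are in the same Region and account (us-east-1 below is only a placeholder); build_export_bucket_policy and apply_bucket_policy are hypothetical helper names, and AWS also recommends adding source-account conditions that are omitted here for brevity.

```python
import json


def build_export_bucket_policy(bucket_name, region):
    """Bucket policy letting CloudWatch Logs in `region` export into the bucket."""
    principal = {"Service": f"logs.{region}.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # CloudWatch Logs checks the bucket ACL before exporting.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Exported objects are written with the bucket-owner-full-control ACL.
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Condition": {
                    "StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}
                },
            },
        ],
    }


def apply_bucket_policy(s3_client, bucket_name, region):
    """Attach the policy; s3_client is expected to be boto3.client("s3")."""
    policy = build_export_bucket_policy(bucket_name, region)
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))
    return policy
```

put_bucket_policy replaces any existing bucket policy, so if the bucket already has one, merge these statements into it rather than overwriting it.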

Analyzing

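```python
import time


def analyze_logs_with_athena():
    # Set the parameters for the Athena query.
    # Assumption: cloudwatch_logs lives in Athena's 'default' database.
    params = {
        'QueryString': 'SELECT * FROM cloudwatch_logs',
        'QueryExecutionContext': {'Database': 'default'},
        'ResultConfiguration': {
            'OutputLocation': 's3://{}/athena/results'.format(s3_bucket_name)
        }
    }

    # Create an Athena client
    athena = boto3.client('athena')

    # Start the query
    query_id = athena.start_query_execution(**params)['QueryExecutionId']

    # Poll until the query reaches a terminal state
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)
        state = execution['QueryExecution']['Status']['State']
        if state in ('SUCCEEDED', 'FAILED', 'CANCELLED'):
            break
        time.sleep(2)

    if state != 'SUCCEEDED':
        raise RuntimeError(f'Athena query {query_id} ended in state {state}')

    # Get the query results (for a SELECT, the first row holds the column names)
    return athena.get_query_results(QueryExecutionId=query_id)


analyze_logs_with_athena()
```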

This example exports the logs from the specified CloudWatch log group to an S3 bucket and then uses Athena to run a query on the logs and retrieve the query results.
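The query above assumes a table named cloudwatch_logs already exists. As a minimal sketch, assuming the exported objects are gzip-compressed text files in which each line is a timestamp, a space, and the log message, a RegexSerDe table over the export prefix could be declared as follows. build_cloudwatch_logs_ddl and ERRORS_QUERY are hypothetical names; check the regular expression against a sample of your own exported files before relying on it.

```python
def build_cloudwatch_logs_ddl(bucket, prefix, table="cloudwatch_logs"):
    """Return Athena DDL for a two-column table over exported CloudWatch logs.

    Assumes each exported line looks like '<timestamp> <message>'; the regex
    puts everything before the first space in event_time and the rest in message.
    """
    location = f"s3://{bucket}/{prefix.strip('/')}/"
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} (\n"
        "  event_time string,\n"
        "  message string\n"
        ")\n"
        "ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'\n"
        "WITH SERDEPROPERTIES ('input.regex' = '^([^ ]*) (.*)$')\n"
        f"LOCATION '{location}'"
    )


# Example query once the table exists: the 50 most recent lines mentioning ERROR.
ERRORS_QUERY = (
    "SELECT event_time, message FROM cloudwatch_logs "
    "WHERE message LIKE '%ERROR%' ORDER BY event_time DESC LIMIT 50"
)
```

The DDL only needs to run once, for example through athena.start_query_execution with the same ResultConfiguration as above. The export typically also writes a small aws-logs-write-test object under the prefix, which may appear as a stray row.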

Defining and Assigning IAM Policies

Before running any of the code above, we need to create the necessary IAM policies and permissions.

1. We need to grant the Lambda function permission to send emails using SES. To do so, attach an IAM policy to the IAM role associated with the function (its execution role).

Here is an example IAM policy that allows the Lambda function to send emails using SES; add it as an inline policy to your Lambda function's role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ses:SendEmail",
      "Resource": "*"
    }
  ]
}
```

2. We need to set up an S3 bucket and grant the necessary permissions to the IAM role associated with the Lambda function. We also need to create an Athena database and a table that map to the exported CloudWatch logs in S3.

Create a bucket from the AWS Management Console. Once the bucket has been created, grant permissions to the IAM role associated with your Lambda function by attaching an IAM policy to the role.

Here is a sample IAM policy that allows the Lambda function to read and write objects in the S3 bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::my-log-bucket",
        "arn:aws:s3:::my-log-bucket/*"
      ]
    }
  ]
}
```

Attach this policy as an inline policy to the Lambda function's role.
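Both inline policies can also be attached with the SDK instead of the console. The following is a minimal sketch, assuming the function's execution role is named my-function-role and using the hypothetical policy names ses-send and s3-log-access; the helper takes boto3.client('iam') as a parameter.

```python
import json

# The SES policy from step 1.
SES_SEND_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "ses:SendEmail", "Resource": "*"}
    ],
}


def s3_access_policy(bucket_name):
    """The read/write policy from step 2, parameterized by bucket name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }


def attach_inline_policies(iam_client, role_name, policies):
    """Attach each {policy_name: document} pair as an inline policy on the role.

    put_role_policy creates the inline policy, or replaces an existing
    inline policy with the same name on that role.
    """
    for policy_name, document in policies.items():
        iam_client.put_role_policy(
            RoleName=role_name,
            PolicyName=policy_name,
            PolicyDocument=json.dumps(document),
        )
```

For example: attach_inline_policies(boto3.client('iam'), 'my-function-role', {'ses-send': SES_SEND_POLICY, 's3-log-access': s3_access_policy('my-log-bucket')}).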

The JSON policies shown here are basic configurations limited to the use case at hand; they can be expanded to cover additional actions and integrated with the wider suite of AWS services.
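For instance, if the export and Athena code above runs under the same role, that role needs permissions beyond the two policies in this section. The sketch below is a hedged starting point, not a verified minimal policy: the action list is an assumption to adjust for your account (Athena's use of the AWS Glue Data Catalog in particular varies by setup), Resource "*" is used for brevity, and build_log_analysis_policy is a hypothetical helper.

```python
import json


def build_log_analysis_policy(results_bucket):
    """Assumed permissions for the export and Athena steps; review before use."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Start and monitor the CloudWatch Logs export task.
                "Effect": "Allow",
                "Action": ["logs:CreateExportTask", "logs:DescribeExportTasks"],
                "Resource": "*",
            },
            {
                # Run Athena queries and read their status and results.
                "Effect": "Allow",
                "Action": [
                    "athena:StartQueryExecution",
                    "athena:GetQueryExecution",
                    "athena:GetQueryResults",
                ],
                "Resource": "*",
            },
            {
                # Table definitions usually live in the AWS Glue Data Catalog.
                "Effect": "Allow",
                "Action": ["glue:GetDatabase", "glue:GetTable", "glue:CreateTable"],
                "Resource": "*",
            },
            {
                # Athena looks up the Region of the query-results bucket.
                "Effect": "Allow",
                "Action": ["s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{results_bucket}",
            },
        ],
    }


if __name__ == "__main__":
    # Print the document so it can be pasted into the console as an inline policy.
    print(json.dumps(build_log_analysis_policy("my-log-bucket"), indent=2))
```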

Conclusion

AWS Lambda is a serverless computing service that runs code on demand in response to triggering events. Serverless computing is being adopted rapidly, so understanding how Lambda works and how to develop for it is a valuable skill.

IAM lets you create and manage AWS users and groups and control who has access to AWS resources. A key benefit of IAM policies is that they offer a centralized, flexible way to manage that access: you can specify who can access which resources and under what conditions. This helps you enforce your company's security and compliance policies and protect your resources from unauthorized access.

Things to keep in mind when designing a performant AWS Lambda solution:

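- Use an appropriate memory size (Lambda allocates CPU in proportion to the configured memory)

- Use an appropriate timeout value (the default is 3 seconds and the maximum is 15 minutes)

- Enable compression

- Use layers to share libraries and dependencies across functions

- Use environment variables for configuration such as sender addresses and bucket names

- Use versioning and aliases to deploy and roll back changes safely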
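Two of these tips, environment variables and the memory and timeout settings, can be sketched in code. The example assumes hypothetical environment variable names SENDER_EMAIL and LOG_BUCKET (so the sender address need not be hard-coded in send_email) and uses the Lambda update_function_configuration call; note that passing Environment replaces the function's whole set of environment variables.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class EmailConfig:
    sender: str
    log_bucket: str


def load_config(environ=os.environ):
    """Read deployment-specific settings from (hypothetical) environment variables."""
    try:
        return EmailConfig(
            sender=environ["SENDER_EMAIL"],
            log_bucket=environ.get("LOG_BUCKET", "my-log-bucket"),
        )
    except KeyError as exc:
        raise RuntimeError(f"environment variable {exc} is not set") from exc


def tune_function(lambda_client, function_name, memory_mb, timeout_s, variables):
    """Set memory, timeout, and environment variables on an existing function.

    lambda_client is expected to be boto3.client("lambda"). The Environment
    argument replaces every existing variable, so pass the complete set.
    """
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        MemorySize=memory_mb,  # CPU is allocated in proportion to memory
        Timeout=timeout_s,  # seconds; the maximum is 900
        Environment={"Variables": variables},
    )
```

Inside the function, send_email could then use load_config().sender as its Source instead of a literal address.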

This blog post demonstrated:

- How to build an AWS Lambda function that sends simple emails in response to events.

- How to design IAM policies and attach them to service roles to grant read and write access.

- How to export Lambda-generated logs from Amazon CloudWatch to S3 for analysis.

- How to use Amazon Athena to query and analyze the logs in S3.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.