Introduction

We train machines so that they can automate tasks. In machine learning, we use various kinds of algorithms that let a machine learn the relationships within the data provided and make predictions from them. When the output we need to predict is a continuous numerical value, the task is called a regression problem.

Regression analysis revolves around relatively simple algorithms that are widely used in finance, investing, and other fields. It establishes the relationship between a single dependent variable and one or more independent variables. Predicting the price of a house or the salary of an employee are among the most common regression problems.


Linear ML algorithms

Linear Regression

Ridge Regression - The L2 Norm

Ridge regression shrinks the coefficients, but it does not push any of them all the way to zero. Hence, your final model will still include all of the features.
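To make the shrinkage concrete, here is a minimal sketch of ridge regression with a single feature. It assumes the penalised objective is the sum of squared errors plus `alpha` times the squared slope, with an unpenalised intercept. The function name `ridge_slope` and the toy data are illustrative assumptions, not taken from any library.

```python
def ridge_slope(xs, ys, alpha):
    """Slope of a one-feature ridge fit (the intercept is not penalised)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Centred cross-product and sum of squares
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    # The L2 penalty adds alpha to the denominator, which shrinks the slope
    return sxy / (sxx + alpha)


# Toy data following y = 2x exactly (made up for illustration)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 6.0, 8.0, 10.0]

for alpha in (0.0, 1.0, 10.0, 100.0):
    print(alpha, ridge_slope(xs, ys, alpha))
```

With no penalty the slope is 2. As `alpha` grows the slope moves toward zero, but because the penalty only enlarges the denominator, it never becomes exactly zero.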

Lasso Regression - The L1 Norm

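Both lasso and ridge are regularisation methods. Unlike ridge, the L1 penalty used by lasso can shrink some coefficients exactly to zero, which effectively removes those features from the model.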
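As a rough illustration of how an L1 penalty behaves, the sketch below fits a one-feature lasso using soft-thresholding. It assumes the objective is half the sum of squared errors plus `alpha` times the absolute slope. Libraries often scale the objective differently, so `alpha` values are not directly comparable. The name `lasso_slope` and the data are hypothetical.

```python
def lasso_slope(xs, ys, alpha):
    """Slope of a one-feature lasso fit via soft-thresholding."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    # Soft-thresholding: pull sxy toward zero by alpha, stopping at zero
    if sxy > alpha:
        return (sxy - alpha) / sxx
    if sxy < -alpha:
        return (sxy + alpha) / sxx
    return 0.0


# Toy data following y = 2x exactly (made up for illustration)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 6.0, 8.0, 10.0]

for alpha in (0.0, 5.0, 20.0, 25.0):
    print(alpha, lasso_slope(xs, ys, alpha))
```

Here the slope drops linearly with `alpha` and becomes exactly zero once the penalty is large enough.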

Let us go through some examples:

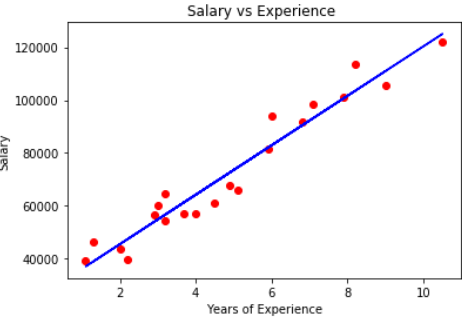

Suppose we have a dataset with the years of experience and salaries of different employees. Our aim is to build a model that predicts an employee's salary from their years of experience. Since the data contains one independent and one dependent variable, we can use simple linear regression for this problem.
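The salary example can be sketched in a few lines with the closed-form least-squares solution for one feature. The numbers below are made up purely for illustration and do not come from the dataset in the figure. `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up illustration data: years of experience and salary
years = [1, 2, 3, 4, 5]
salaries = [40000, 45000, 52000, 55000, 63000]

intercept, slope = fit_line(years, salaries)
predicted = intercept + slope * 6  # estimate for 6 years of experience
print(f"salary = {intercept:.0f} + {slope:.0f} * years")
print(f"predicted salary at 6 years: {predicted:.0f}")
```

The slope is the estimated salary increase per extra year of experience, and the intercept is the estimated salary at zero years.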

Non-Linear ML algorithms

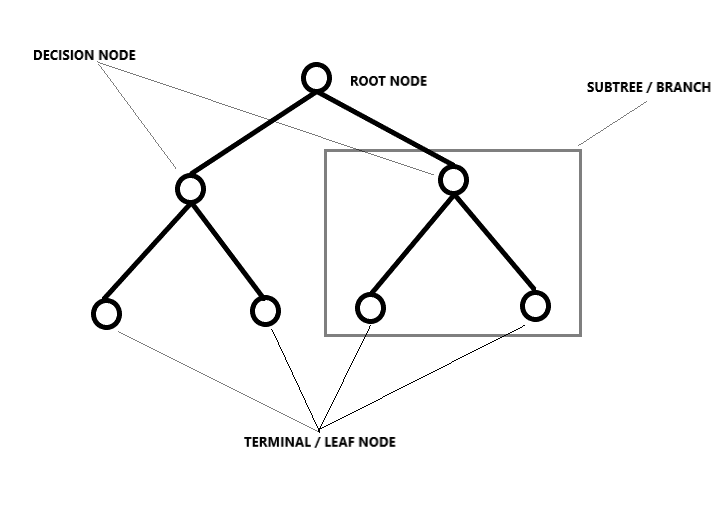

Decision Tree Regression

Let us take the case of house price prediction: given a dataset with 13 features and around 500 rows, you need to predict the price of each house. Since you have a considerable number of samples, you can turn to trees or other non-linear methods to predict the values.
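To show the core idea of a regression tree, the sketch below fits a tree with a single split on a single feature. It picks the threshold that minimises the squared error and predicts the mean of each side. A real decision tree repeats this recursively over all features. The helper names and the tiny dataset are assumptions made for illustration, not the 13-feature housing data.

```python
def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def fit_stump(xs, ys):
    """Return (threshold, left_mean, right_mean) for the best single split."""
    points = sorted(set(xs))
    if len(points) < 2:
        raise ValueError("need at least two distinct feature values")
    best = None
    # Candidate thresholds are midpoints between neighbouring feature values
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        error = sse(left) + sse(right)
        if best is None or error < best[0]:
            best = (error, threshold,
                    sum(left) / len(left), sum(right) / len(right))
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


# Made-up data: house size and price (arbitrary units)
sizes = [50, 60, 70, 120, 130, 140]
prices = [100, 110, 120, 300, 310, 320]

stump = fit_stump(sizes, prices)
print(stump)
print(predict_stump(stump, 65), predict_stump(stump, 125))
```

The split lands between the small and large houses, and each leaf predicts the average price of the houses that fall into it.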

Random Forest

– Pick K random data points from the training set.

– Build a decision tree associated with these data points.

– Choose the number of trees to build (provided as an argument) and repeat the steps above.

– For a new data point, have each tree predict the value of the dependent variable for the given input.

This is similar to guessing the number of balls in a box. Suppose we note down the guesses of many people and then average them to decide on the number of balls in the box. Random forest applies the same idea with multiple decision trees. Because it builds many trees, however, it needs more training time and computational power, which remains a drawback.
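The steps above can be sketched as a toy random forest. Two simplifications are assumed here: each tree is a one-split tree on a single feature, and the K points are drawn with replacement. Real implementations grow deeper trees and also sample features. All function names and the data are hypothetical.

```python
import random


def mean(values):
    return sum(values) / len(values)


def sse(values):
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def fit_stump(xs, ys):
    """One-split regression tree: (threshold, left_mean, right_mean)."""
    # Fallback when all sampled x values are equal: predict the overall mean
    best_error, best_stump = None, (float("inf"), mean(ys), mean(ys))
    points = sorted(set(xs))
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        error = sse(left) + sse(right)
        if best_error is None or error < best_error:
            best_error = error
            best_stump = (threshold, mean(left), mean(right))
    return best_stump


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_forest(xs, ys, n_trees, k, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):  # repeat for the chosen number of trees
        # Pick K random data points from the training set (with replacement)
        picks = [rng.randrange(len(xs)) for _ in range(k)]
        # Build a tree on just those points
        forest.append(fit_stump([xs[i] for i in picks],
                                [ys[i] for i in picks]))
    return forest


def predict_forest(forest, x):
    # Every tree predicts; the forest returns the average of the predictions
    return mean([predict_stump(stump, x) for stump in forest])


# Made-up data: house size and price (arbitrary units)
sizes = [50, 60, 70, 120, 130, 140]
prices = [100, 110, 120, 300, 310, 320]

forest = fit_forest(sizes, prices, n_trees=25, k=6, seed=0)
print(predict_forest(forest, 60), predict_forest(forest, 130))
```

Training cost grows with `n_trees`, since every tree is fitted separately. That is the time and compute drawback mentioned above.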

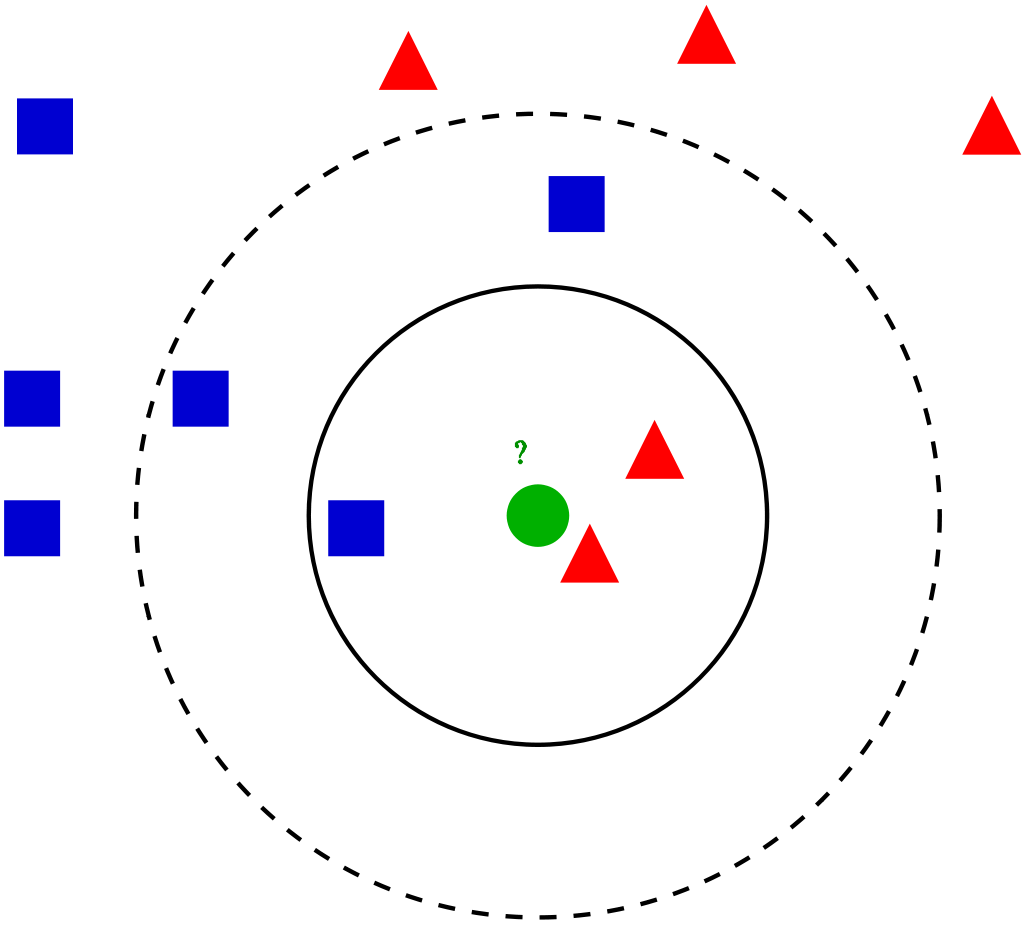

K Nearest Neighbors (KNN model)

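The distance metric used to find the nearest neighbors can be given as an argument. The default is “Minkowski”, a generalisation of the “euclidean” and “manhattan” distances: with power p = 1 it is the Manhattan distance, and with p = 2 it is the Euclidean distance.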

Predictions can be slow when the dataset is large, and they suffer when the data is of poor quality. Since each prediction needs to take all the data points into account, the model has to keep the whole training set, which takes up more memory.
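A minimal sketch of KNN regression with the Minkowski distance is shown below. The function names, the parameter `p`, and the data are assumptions for illustration and do not represent any particular library's API.

```python
def minkowski(a, b, p=2):
    """Minkowski distance: p=1 is Manhattan, p=2 is Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def knn_predict(train_X, train_y, query, k=3, p=2):
    """Average the targets of the k training points closest to the query."""
    # Every training point is scanned, so cost grows with the dataset size
    distances = sorted(
        (minkowski(row, query, p), target)
        for row, target in zip(train_X, train_y)
    )
    nearest = distances[:k]
    return sum(target for _, target in nearest) / k


# Made-up one-feature data
train_X = [(1,), (2,), (3,), (10,)]
train_y = [10, 20, 30, 100]

print(minkowski((0, 0), (3, 4), p=1))  # Manhattan distance
print(minkowski((0, 0), (3, 4), p=2))  # Euclidean distance
print(knn_predict(train_X, train_y, (2,), k=3))
```

Note that there is no training step beyond storing `train_X` and `train_y`. All the work happens at prediction time, which is why large datasets make KNN slow and memory-hungry.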

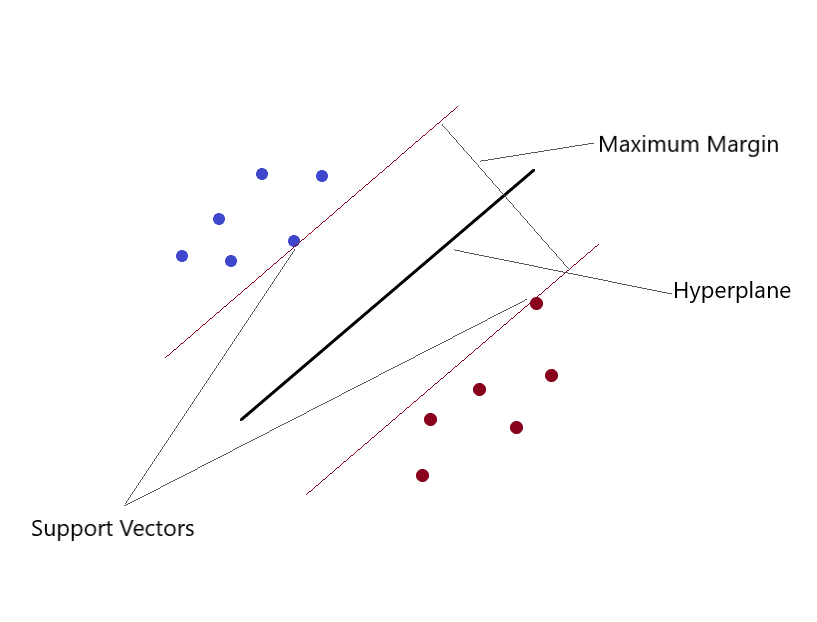

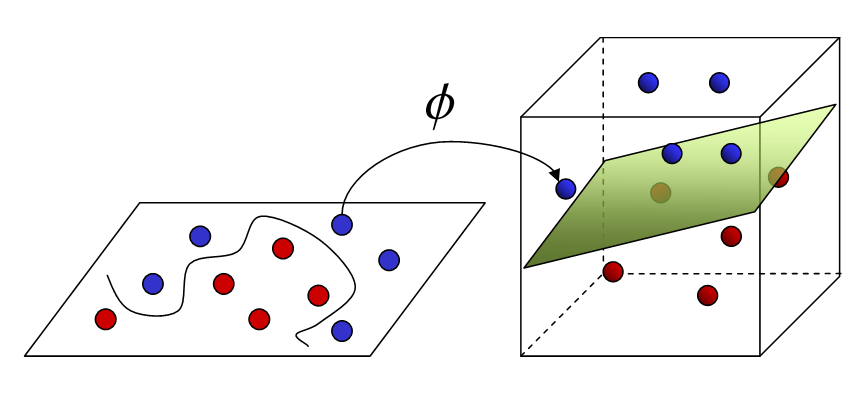

Support Vector Machines (SVM)

If the number of training samples is much larger than the number of features, KNN tends to do better than SVM. SVM tends to outperform KNN when there are many features and less training data.

Well, we have come to the end of this article, in which we briefly discussed the theory behind several kinds of regression algorithms. This is Surabhi, and I am a B.Tech undergraduate. Do check out my LinkedIn profile and get connected. I hope you enjoyed reading this. Thank you.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.

Conclusion

In summary, knowing about regression algorithms is essential in machine learning. Linear regression is the basic building block, and Ridge and Lasso add regularisation to keep the coefficients in check. Other tools like Decision Trees, Random Forest, KNN, and SVM make it possible to model and predict more complex relationships. It’s like having a toolbox for different jobs in machine learning!

Frequently Asked Questions

Regression is a machine learning task that aims to predict a numerical value based on input data. It’s like guessing a number on a scale. Classification, on the other hand, is about predicting which category or group something belongs to, like sorting things into different buckets.

Imagine predicting the price of a house based on factors like size, location, and number of bedrooms. That’s a classic example of regression in machine learning. You’re trying to estimate a specific value (the price) using various input features.

Regression is used in many real-world scenarios. For instance, it helps predict stock prices, sales trends, or weather conditions such as temperature. In essence, regression in machine learning comes in handy whenever you need to predict a numerical outcome.