Stability AI, the company best known for the Stable Diffusion image generator, is making waves in language models with its latest release, Stable LM 2 1.6B. With the company reportedly facing financial troubles, this strategic shift towards language models could prove significant. In this article, we look at the model’s key features, its implications, and the company’s journey leading up to the release.

A Shift in Focus

Stability AI has been steering towards language models, as shown by recent releases such as StableLM Zephyr 3B and the original StableLM nine months ago. This move aligns with the industry trend of embracing small language models (SLMs). However, the shift appears to be more than a technological evolution; it might also be a strategic response to financial pressures and rumors of a potential acquisition.

Unveiling Stable LM 2 1.6B

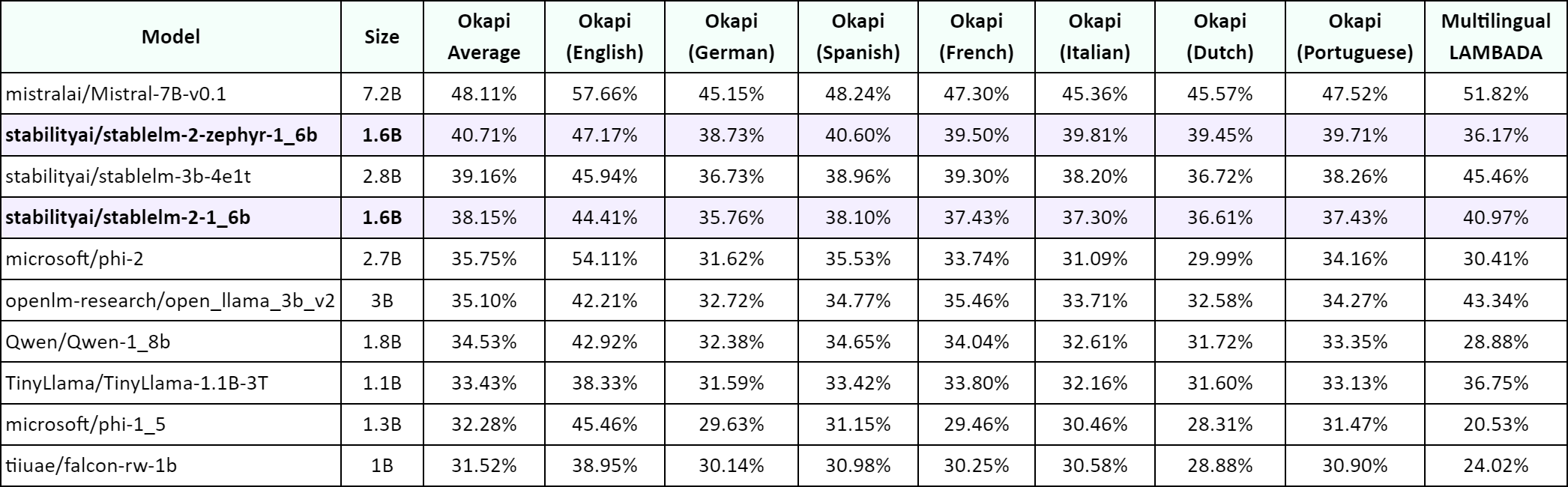

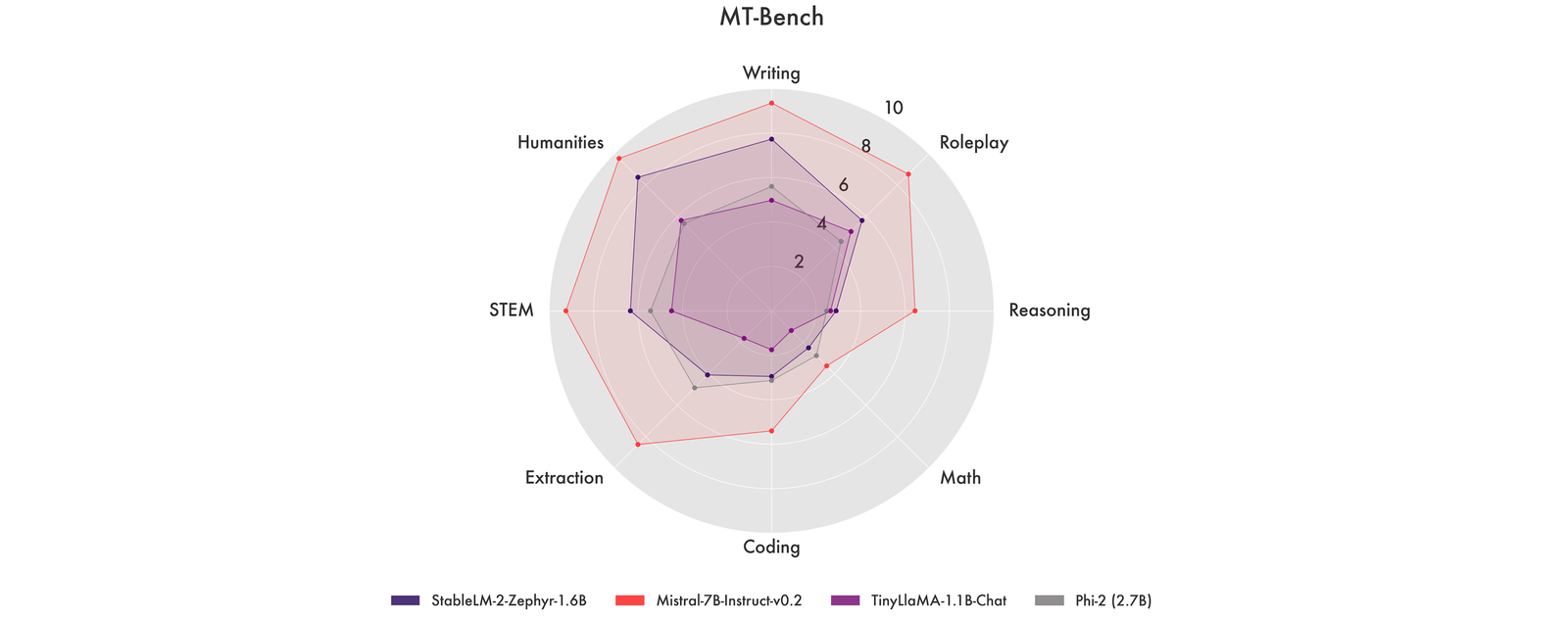

The spotlight is on Stable LM 2 1.6B, a compact yet capable language model designed to lower hardware barriers and encourage wider developer participation. Trained on two trillion tokens across seven languages, including English, Spanish, and French, Stability’s latest model outperforms other models with fewer than 2 billion parameters, including Microsoft’s Phi-1.5, TinyLlama 1.1B, and Falcon 1B.

Bridging the Gap with Transparency

Stability AI emphasizes transparency, providing complete details on the model’s training process and data. The company is releasing not only the base model but also an instruction-tuned version. It is also releasing the final pre-training checkpoint, along with optimizer states, making it easier for developers to fine-tune and experiment with the model.

The Accessibility Factor

A noteworthy aspect of Stable LM 2 1.6B is its compatibility with low-end devices, which challenges the assumption that only larger models can perform well. Despite its smaller scale, the model competes with larger counterparts, including Stability AI’s own 3 billion parameter model. The release underscores a broader industry trend: making AI technology accessible and practical for a wider array of devices and applications.
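To give a rough sense of why parameter count matters on modest hardware, the sketch below estimates how much memory the model weights alone would occupy at a few common numeric precisions. The 1.6 billion and 3 billion parameter counts come from the article; the list of precisions and the helper name `weight_memory_gb` are illustrative assumptions, and the estimate ignores activations, caches, and runtime overhead, so real usage will be higher.

```python
# Back-of-the-envelope estimate of weight storage only.
# Assumption: memory ~= parameter count x bytes per parameter.
# Activations, KV cache, and framework overhead are NOT included.

# Bytes per parameter for some common precisions (illustrative choices,
# not formats confirmed by the article).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the article.
    models = {"Stable LM 2 1.6B": 1.6e9, "3B model": 3.0e9}
    for name, params in models.items():
        for precision, width in BYTES_PER_PARAM.items():
            gb = weight_memory_gb(params, width)
            print(f"{name} @ {precision}: ~{gb:.1f} GB")
```

Under these assumptions, a 16-bit copy of the 1.6 billion parameter weights needs roughly half the memory of the 3 billion parameter model at the same precision, which is part of what makes smaller models practical on consumer devices.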

Cautionary Notes and Future Prospects

While Stable LM 2 1.6B performs well on various benchmarks, the company acknowledges its limitations, such as an increased risk of hallucinations and potentially toxic language output due to its small size. These caveats do not overshadow the model’s potential impact on the generative AI ecosystem, where it lowers barriers for developers and fosters innovation.

Our Say

Stability AI’s move towards language models, exemplified by Stable LM 2 1.6B, reflects not only technological prowess but also a strategic pivot in the face of financial challenges. The model’s transparency, accessibility, and competitive performance position Stability AI as a key player in the evolving landscape of small language models. The shift opens new possibilities for developers and marks a step towards democratizing generative AI.
